Difference between revisions of "Control with TrackCam Vision Feedback and MATLAB"

| (3 intermediate revisions by the same user not shown) | |||

| Line 89: | Line 89: | ||

===Timing=== |

===Timing=== |

||

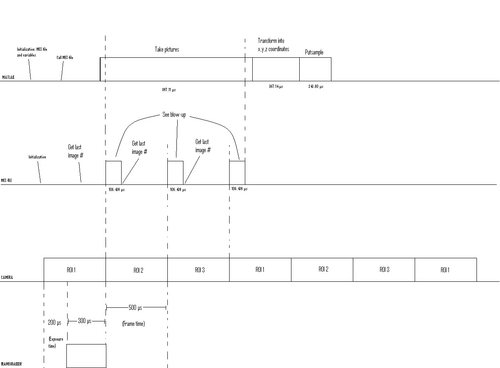

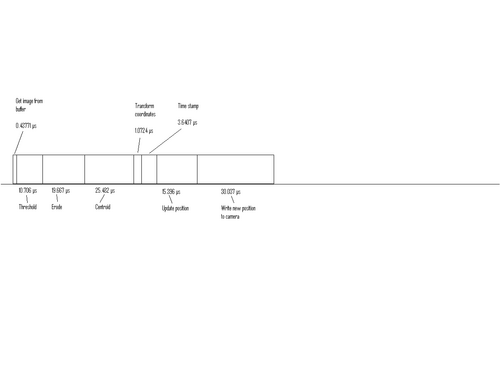

The timing of the system is detailed on the timing diagrams (Fig 5 and 6). The idea, which is not fully implemented yet, is to have there be two pictures already taken when the MATLAB program calls the MEX-file, so that the program waits for only one more picture to be taken and then grabs all three and processes them all. To implement this, the frame time has to be just a little shorter than half of the total processing time, including the MEX-file processing and the MATLAB processing. |

The timing of the system is detailed on the timing diagrams (Fig 5 and 6). The idea, which is not fully implemented yet, is to have there be two pictures already taken when the MATLAB program calls the MEX-file, so that the program waits for only one more picture to be taken and then grabs all three and processes them all. To implement this, the frame time has to be just a little shorter than half of the total processing time, including the MEX-file processing and the MATLAB processing. With a frame time of 500 microseconds, the system keeps up fine and can take pictures at 2000 frames per second. However, tweaking the frame time and exposure time to fit more neatly with processing time can make the system more efficient and perhaps even put the rate to over 2000 Hz, depending on how fast the processing can be done. |

||

[[image:TimingDiagram.jpg|thumb|500px|'''Figure 5:''' |

[[image:TimingDiagram.jpg|thumb|500px|'''Figure 5:''' Timing Diagram.|left]] |

||

[[image:TimingDiagramDetail.jpg|thumb|500px|'''Figure 6:''' |

[[image:TimingDiagramDetail.jpg|thumb|500px|'''Figure 6:''' Blowup of Timing Diagram.|right]] |

||

==Next Steps== |

|||

In the future, more things can be done to further speed up the system. First of all, using separate cores for the camera and processing may speed up the system as the two tasks would run in parallel. This would require shared memory. Another step that can be taken is to implement all the MATLAB computations in C. This would help because C is quicker than MATLAB and because MATLAB wouldn't have to access the MEX-file every time it wants to take a picture. Also, using a real-time operating system like Linux RTAI would also speed up the process. Stripping down Windows makes it close to real-time, but in reality it is not real-time. |

|||

==Code== |

==Code== |

||

| Line 796: | Line 798: | ||

===skeleton.cpp=== |

===skeleton.cpp=== |

||

<nowiki> |

|||

/** |

|||

* @file skeleton.cpp displays code for a simple one ROI tracker |

|||

* |

|||

* skeleton.cpp contains the necessary components to start and modify the vision system for |

|||

* application-specific tracking/image processing tasks. The code is comprised of three |

|||

* functions: set_initial_positions(...), display_tracking(...), and main(). |

|||

* |

|||

* set_initial_positions(...) shows one possible initialization strategy for the camera's |

|||

* ROI. This implementation simply takes the known initial position of the object to track |

|||

* (the blob) and centers the camera's ROI bounding box around that center. |

|||

* |

|||

* display_tracking(...) is a sample application of interest for showing (processed) camera |

|||

* frames. A function like this also provides visual feedback of what is going on |

|||

* internally in the system and is an invaluable debugging tool (see display_run.cpp for |

|||

* a more complicated example). |

|||

* |

|||

* Finally, main() is the starting point of the program. Its role is to initialize the |

|||

* camera and run the logic necessary for tracking objects. |

|||

* |

|||

* Most, if not all, applications will follow this recipe of initialization routines, |

|||

* application-specific routines, and finally a main program that sits in a loop acquiring |

|||

* the next image to process. A few common setup and helper functions can be found in |

|||

* utils.cpp. |

|||

*/ |

|||

#include "fcdynamic.h" |

|||

#include "constants.h" |

|||

#include "mex.h" |

|||

/** Grabs an image from the camera and displays the image on screen |

|||

* |

|||

* The purpose of this program is to show how to get the camera up and running. |

|||

* Modifications can be made by modifying the while(...) loop with different image |

|||

* processing and tracking logic. |

|||

*/ |

|||

// important variables used in most applications |

|||

int rc; |

|||

Fg_Struct *fg = NULL; |

|||

TrackingWindow cur[NUM_ROI]; |

|||

int index = 0; |

|||

int seq[] = SEQ; |

|||

int takedata = 0; // takedata has value 0 or 1 depending on whether data is being recorded |

|||

char key = '0'; // initialize command key |

|||

int timeMs; // variable for recording time in microseconds |

|||

int inittime; // initial time when data collection begins |

|||

int counter = 0; // Counts the number of images taken |

|||

FrameInfo timing; |

|||

IplImage *cvDisplay[NUM_ROI]; |

|||

// allocate resources |

|||

void initialization(void) { |

|||

// following lines are for displaying images only! See OpenCV doc for more info. |

|||

// they can be left out, if speed is important. |

|||

//IplImage *cvDisplay[NUM_ROI]; |

|||

#if DISPLAY_TRACKING |

|||

cvDisplay[0] = cvCreateImageHeader(cvSize(ROI_BOX_W0, ROI_BOX_H0), |

|||

BITS_PER_PIXEL, NUM_CHANNELS); |

|||

cvNamedWindow(DISPLAY0, CV_WINDOW_AUTOSIZE); |

|||

cvDisplay[1] = cvCreateImageHeader(cvSize(ROI_BOX_W1, ROI_BOX_H1), |

|||

BITS_PER_PIXEL, NUM_CHANNELS); |

|||

cvNamedWindow(DISPLAY1, CV_WINDOW_AUTOSIZE); |

|||

cvDisplay[2] = cvCreateImageHeader(cvSize(ROI_BOX_W2, ROI_BOX_H2), |

|||

BITS_PER_PIXEL, NUM_CHANNELS); |

|||

cvNamedWindow(DISPLAY2, CV_WINDOW_AUTOSIZE); |

|||

#endif |

|||

// initialize the tracking window (i.e. blob and ROI positions) |

|||

memset(&cur[0], 0, sizeof(TrackingWindow)); |

|||

set_initial_positions(&cur[0]); |

|||

memset(&cur[1], 0, sizeof(TrackingWindow)); |

|||

set_initial_positions2(&cur[1]); |

|||

memset(&cur[2], 0, sizeof(TrackingWindow)); |

|||

set_initial_positions3(&cur[2]); |

|||

// initialize the camera |

|||

rc = init_cam(&fg, MEMSIZE(cur[0].roi_w, cur[0].roi_h), NUM_BUFFERS, CAMLINK); |

|||

if(rc != FG_OK) { |

|||

printf("init: %s\n", Fg_getLastErrorDescription(fg)); |

|||

Fg_FreeGrabber(fg); |

|||

//return rc; |

|||

} |

|||

// start acquiring images (this function also writes any buffered ROIs to the camera) |

|||

rc = acquire_imgs(fg, (int *) &seq, SEQ_LEN); |

|||

if(rc != FG_OK) { |

|||

printf("init: %s\n", Fg_getLastErrorDescription(fg)); |

|||

Fg_FreeGrabber(fg); |

|||

//return rc; |

|||

} |

|||

// initialize parameters |

|||

//img_nr = 1; |

|||

int initnum; |

|||

QueryPerformanceFrequency(&timing.freq); |

|||

} |

|||

// de-allocate memory |

|||

void memclear(void) { |

|||

// free camera resources |

|||

rc = deinit_cam(fg); |

|||

if(rc != FG_OK) { |

|||

printf("deinit: %s\n", Fg_getLastErrorDescription(fg)); |

|||

//return rc; |

|||

} |

|||

} |

|||

// get the images and process them |

|||

void takePicture(double *coords) |

|||

{ |

|||

int i; |

|||

static int img_nr = 1; |

|||

// start a timer |

|||

QueryPerformanceCounter(&timing.grab_start); |

|||

// get number of last image acquired |

|||

img_nr = Fg_getLastPicNumberBlocking(fg, 1, PORT_A, TIMEOUT); |

|||

// stop timer |

|||

QueryPerformanceCounter(&timing.grab_stop); |

|||

// record image number |

|||

coords[12] = img_nr; |

|||

// record image number modulo 3 |

|||

coords[13] = img_nr%3; |

|||

// record time it took to acquire last image |

|||

coords[14] = (double) (timing.grab_stop.QuadPart - timing.grab_start.QuadPart)/timing.freq.QuadPart; |

|||

QueryPerformanceCounter(&timing.grab_start); |

|||

// camera has taken picture of the real ball |

|||

if (img_nr%3 == 1) { |

|||

cur[0].img = (unsigned char *) Fg_getImagePtr(fg, img_nr, PORT_A); |

|||

img_nr = Fg_getLastPicNumberBlocking(fg, img_nr+1, PORT_A, TIMEOUT); |

|||

cur[1].img = (unsigned char *) Fg_getImagePtr(fg, img_nr, PORT_A); |

|||

img_nr = Fg_getLastPicNumberBlocking(fg, img_nr+1, PORT_A, TIMEOUT); |

|||

cur[2].img = (unsigned char *) Fg_getImagePtr(fg, img_nr, PORT_A); |

|||

} |

|||

// camera has taken picture of the reflected ball |

|||

else if (img_nr%3 == 2) { |

|||

cur[1].img = (unsigned char *) Fg_getImagePtr(fg, img_nr, PORT_A); |

|||

cur[0].img = (unsigned char *) Fg_getImagePtr(fg, img_nr-1, PORT_A); |

|||

img_nr = Fg_getLastPicNumberBlocking(fg, img_nr+1, PORT_A, TIMEOUT); |

|||

cur[2].img = (unsigned char *) Fg_getImagePtr(fg, img_nr, PORT_A); |

|||

} |

|||

// camera has taken picture of the bar |

|||

else { |

|||

cur[2].img = (unsigned char *) Fg_getImagePtr(fg, img_nr, PORT_A); |

|||

cur[1].img = (unsigned char *) Fg_getImagePtr(fg, img_nr-1, PORT_A); |

|||

cur[0].img = (unsigned char *) Fg_getImagePtr(fg, img_nr-2, PORT_A); |

|||

} |

|||

QueryPerformanceCounter(&timing.grab_stop); |

|||

// record time to get images |

|||

coords[15] = (double) (timing.grab_stop.QuadPart - timing.grab_start.QuadPart)/timing.freq.QuadPart; |

|||

QueryPerformanceCounter(&timing.grab_start); |

|||

for (index=0;index<3;index++) { |

|||

// process image |

|||

threshold(&cur[index], THRESHOLD); |

|||

erode(&cur[index]); |

|||

// calculate centroid |

|||

centroid(&cur[index]); |

|||

// Transform coordinates from camera frame to realworld frame |

|||

if (index == 1) { |

|||

trans_coords2(&cur[index]); |

|||

} |

|||

else { |

|||

trans_coords(&cur[index]); |

|||

} |

|||

// create timestamp |

|||

timeMs = img_nr; |

|||

Fg_getParameter(fg, FG_TIMESTAMP, |

|||

&timeMs, PORT_A); |

|||

//printf("\n%d\n", time); |

|||

// updata position |

|||

position(&cur[index]); |

|||

// at this point position(...) has updated the ROI, but it only stores |

|||

// the updated values internal to the code. The next step is to flushq |

|||

// the ROI to the camera (see position(...) documentation). |

|||

// write ROI position to camera to be updated on frame "img_nr" |

|||

write_roi(fg, cur[index].roi, img_nr + 6, !DO_INIT); |

|||

// show image on screen |

|||

if (DISPLAY_TRACKING) { |

|||

display_tracking(&cur[index], cvDisplay[index]); |

|||

} |

|||

// record data for reflected ball image |

|||

if (index%3 == 1) { |

|||

coords[5] = (double) (timing.grab_stop.QuadPart - timing.grab_start.QuadPart)/timing.freq.QuadPart; |

|||

coords[3] = (double) cur[1].xc; |

|||

coords[4] = (double) cur[1].yc; |

|||

//printf("%f,%f,%f \n",coords[3],coords[4],coords[5]); |

|||

coords[10] = img_nr; |

|||

} |

|||

// record data for bar image |

|||

else if (index%3 == 2) { |

|||

coords[8] = (double) (timing.grab_stop.QuadPart - timing.grab_start.QuadPart)/timing.freq.QuadPart; |

|||

coords[6] = (double) cur[2].xc; |

|||

coords[7] = (double) cur[2].yc; |

|||

//printf("%f,%f,%f \n",coords[6],coords[7],coords[8]); |

|||

coords[11] = img_nr; |

|||

} |

|||

// record data for real ball image |

|||

else { |

|||

coords[2] = (double) (timing.grab_stop.QuadPart - timing.grab_start.QuadPart)/timing.freq.QuadPart; |

|||

coords[0] = (double) cur[0].xc; |

|||

coords[1] = (double) cur[0].yc; |

|||

//printf("%f,%f \n",cur[0].xc,cur[0].yc); |

|||

coords[9] = img_nr; |

|||

} |

|||

} |

|||

QueryPerformanceCounter(&timing.grab_stop); |

|||

// record time to process images |

|||

coords[16] = (double) (timing.grab_stop.QuadPart - timing.grab_start.QuadPart)/timing.freq.QuadPart; |

|||

// old method of executing this code, wherein each time the MEX-file is accessed, |

|||

// first one picture is taken and processed, then the next, and then the next. That |

|||

// method could be sped up with the technique that is employed now. I left the code |

|||

// in comment below just in case it is ever needed again. |

|||

/* |

|||

for (i=0;i<3;i++) { |

|||

QueryPerformanceCounter(&timing.grab_start); |

|||

img_nr = Fg_getLastPicNumberBlocking(fg, img_nr, PORT_A, TIMEOUT); |

|||

QueryPerformanceCounter(&timing.grab_stop); |

|||

// Obtain index for ROI based on image number |

|||

if (img_nr%3 == 1) { |

|||

index = 0; |

|||

} |

|||

else if (img_nr%3 == 2) { |

|||

index = 1; |

|||

} |

|||

else { |

|||

index = 2; |

|||

} |

|||

cur[index].img = (unsigned char *) Fg_getImagePtr(fg, img_nr, PORT_A); |

|||

// make sure that camera returned a valid image |

|||

if (cur[index].img == NULL) { |

|||

// typically this state only occurs if an invalid ROI has been programmed |

|||

// into the camera (e.g. roi_w == 4). |

|||

printf("img is null: %d\n", img_nr); |

|||

//system("PAUSE"); |

|||

//break; |

|||

} |

|||

// process image |

|||

threshold(&cur[index], THRESHOLD); |

|||

erode(&cur[index]); |

|||

// calculate centroid |

|||

centroid(&cur[index]); |

|||

// Transform coordinates from camera frame to realworld frame |

|||

if (index == 1) { |

|||

trans_coords2(&cur[index]); |

|||

} |

|||

else { |

|||

trans_coords(&cur[index]); |

|||

} |

|||

// create timestamp |

|||

timeMs = img_nr; |

|||

Fg_getParameter(fg, FG_TIMESTAMP, |

|||

&timeMs, PORT_A); |

|||

//printf("\n%d\n", time); |

|||

// updata position |

|||

position(&cur[index]); |

|||

// at this point position(...) has updated the ROI, but it only stores |

|||

// the updated values internal to the code. The next step is to flushq |

|||

// the ROI to the camera (see position(...) documentation). |

|||

// write ROI position to camera to be updated on frame "img_nr" |

|||

write_roi(fg, cur[index].roi, img_nr + 6, !DO_INIT); |

|||

// show image on screen |

|||

if (DISPLAY_TRACKING) { |

|||

display_tracking(&cur[index], cvDisplay[index]); |

|||

} |

|||

// increment to the next desired frame. This has to be at least |

|||

// +2, because the camera's ROI will not be active until the second |

|||

// frame (see Silicon Software FastConfig doc) |

|||

//img_nr += NEXT_IMAGE; |

|||

//QueryPerformanceCounter(&timing.grab_stop); |

|||

if (img_nr%3 == 2) { |

|||

coords[5] = (double) (timing.grab_stop.QuadPart - timing.grab_start.QuadPart)/timing.freq.QuadPart; |

|||

coords[3] = (double) cur[1].xc; |

|||

coords[4] = (double) cur[1].yc; |

|||

//printf("%f,%f,%f \n",coords[3],coords[4],coords[5]); |

|||

coords[10] = img_nr; |

|||

} |

|||

else if (img_nr%3 == 0) { |

|||

coords[8] = (double) (timing.grab_stop.QuadPart - timing.grab_start.QuadPart)/timing.freq.QuadPart; |

|||

coords[6] = (double) cur[2].xc; |

|||

coords[7] = (double) cur[2].yc; |

|||

//printf("%f,%f,%f \n",coords[6],coords[7],coords[8]); |

|||

coords[11] = img_nr; |

|||

} |

|||

else { |

|||

coords[2] = (double) (timing.grab_stop.QuadPart - timing.grab_start.QuadPart)/timing.freq.QuadPart; |

|||

coords[0] = (double) cur[0].xc; |

|||

coords[1] = (double) cur[0].yc; |

|||

//printf("%f,%f \n",cur[0].xc,cur[0].yc); |

|||

coords[9] = img_nr; |

|||

} |

|||

img_nr += NEXT_IMAGE; |

|||

} |

|||

*/ |

|||

} |

|||

// MEX function |

|||

void mexFunction( int nlhs, mxArray *plhs[], |

|||

int nrhs, const mxArray *prhs[]) |

|||

{ |

|||

int select; /* input scalar */ |

|||

double *outMatrix; /* output matrix */ |

|||

/* get the value of the selector */ |

|||

select = mxGetScalar(prhs[0]); |

|||

/* create the output matrix */ |

|||

plhs[0] = mxCreateDoubleMatrix(1,17,mxREAL); |

|||

/* get a pointer to the real data in the output matrix */ |

|||

outMatrix = mxGetPr(plhs[0]); |

|||

// choose which function to execute based on input from MATLAB |

|||

if (select == 0) { |

|||

initialization(); |

|||

} |

|||

else if (select == 1) { |

|||

takePicture(outMatrix); |

|||

} |

|||

else { |

|||

memclear(); |

|||

} |

|||

} |

|||

</nowiki> |

|||

Latest revision as of 16:01, 11 September 2009

Stripping Down Windows

To make the new computer that runs the control system run as efficiently as possible, all extraneous functionalities were turned off. There is not anti-virus software, no firewall, no https for the internet, and so on. Only the bare minimum is left. Below is a list of the remaining functions and what they do. Another thing to do to make MATLAB run faster is to set it to go to the Task Manager, go to Processes, right-click on MATLAB.exe and set it to Realtime priority.

Explorer.exe

- This is the user shell, which we see as the familiar taskbar, desktop, and other user interface features. This process isn't as vital to the running of Windows as you might expect, and can be stopped (and restarted) from Task Manager, usually with no negative side effects on other applications.

nvsvc32.exe

- Part of the NVIDIA graphics card drivers (Detonator). It should be left running on any system using Nvidia graphics hardware to ensure proper function.

MATLAB.exe

- MATLAB

svchost.exe

- an integral part of Windows OS. It cannot be stopped or restarted manually. This process manages 32-bit DLLs and other services. At startup, Svchost.exe checks the services portion of the registry and constructs a list of services that it needs to load. Under normal conditions, multiple instances of Svchost.exe will be running simultaneously. Each Svchost.exe session can contain a grouping of services, so that many services can be run depending on how and where Svchost.exe is started. This allows for better control and debugging.

lsass.exe

- the Local Security Authentication Server. It verifies the validity of user logons to your PC or server. Lsass generates the process responsible for authenticating users for the Winlogon service. This is performed by using authentication packages such as the default, Msgina.dll. If authentication is successful, Lsass generates the user's access token, which is used to launch the initial shell. Other processes that the user initiates then inherit this token.

services.exe

- This is the Services Control Manager, which is responsible for running, ending, and interacting with system services. Use this program to start services, stop them, or change their default from automatic to manual startup.

winlogon.exe

- runs in the background. Winlogon is a part of the Windows Login subsystem, and is necessary for user authorization and Windows XP activation checks.

csrss.exe

- This is the user-mode portion of the Win32 subsystem; Win32.sys is the kernel-mode portion. Csrss stands for Client/Server Run-Time Subsystem, and is an essential subsystem that must be running at all times. Csrss is responsible for console windows, creating and/or deleting threads, and implementing some portions of the 16-bit virtual MS-DOS environment.

smss.exe

- This is the session manager subsystem, which is responsible for starting the user session. This process is initiated by the main system thread and is responsible for various activities, including launching the Winlogon and Win32 (Csrss.exe) processes, and setting system variables. After it has launched these processes, it waits for either Winlogon or Csrss to end. If this happens normally, the system shuts down; if it happens unexpectedly, Smss.exe causes the system to stop responding (hang).

ctfmon.exe

- When you run a Microsoft Office XP program, the file Ctfmon.exe (Ctfmon) runs in the background, even after you quit all Office programs. Ctfmon.exe monitors active windows and provides text input service support for speech recognition, handwriting recognition, keyboard translation, and other alternate user input forms.

rundll32.exe

- This program is part of Windows, and is used to run program code in DLL files as if they were within the actual program.

RTHDCPL.EXE

- a process belonging to the Realtek HD Audio Control Panel and is bundled alongside Realtek sound cards and audio hardware. This program is a non-essential process, but should not be terminated unless suspected to be causing problems.

ipoint.exe

- a process installed alongside a Microsoft IntelliMouse and provides additional configuration options for these devices. "This program is a non-essential process, but should not be terminated unless suspected to be causing problems.

System

- a process which shows up on the tasks on mainly Windows XP, Windows 2003 server and later version of Windows. This is a default system counter and cannot be removed.

One of the methods explored to make the system run as quickly as possible was to have MATLAB and the camera running on separate cores of the computer, so both could run in parallel. This would require for them to communicate through shared memory. At the bottom of this page is a .cpp program that communicates through shared memory. We ultimately did not take this approach because Windows appears to be quick enough for now. Eventually, a real-time operating system such as Linux RTAI would be preferred, but for the time being, Windows suffices. I will therefore not explain further how the shared memory option works. However, if it is ever needed, everything to communicate through shared memory is in that file.

Test of Sample Rates

Overview

To see if using Windows instead of a real-time operating system would be a viable option, we had to test how fast the sampling rate could be set to without losing samples. We tried two methods: the first was to output data on one computer and collect it on the same computer, and the second was to output data on one computer and collect it on another computer.

Single Computer Tests

Pulses

To run these tests, we used Measurement Computing Cards (mcc). Both were installed in the same computer. The MATLAB code that is at the end of this document was then run (testInputOutput.m). It creates an output wave that is a wave of alternating pulses. These were output at a predetermined rate. The input card kept on taking input all the time. Then, the duration of the input pulses was measured to see how long each pulse actually was as opposed to how long we wanted it to be. Plots of this were made, along with the standard deviation and the extra duration of each pulse. Using this method, we were able to see how fast we could feasibly run the alternate pulses without losing accuracy. Figure 1 shows that this test started to break down around 2000 Hz.

Two Computers

Pulses

The next step was to output data on one computer and to input data on another. For output, we again used the mcc card. For input, however, we used a National Instruments (ni) card. Two programs were run for this test. The program run from the output computer was testOutput.m, and the one run on the input computer was testInputCard.m. With this simple modification to the setup, the test still ran fine at 10000 Hz, as can be seen in Figure 2.

Single Computer Again

Sine Wave

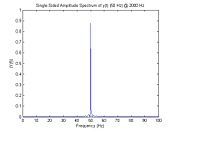

Following the tests with pulses, we decided to try to send a sine wave instead of a pulse, and to see at what output sample rate the frequency of the wave started to become inaccurate. This test is more practical, since in reality we would be sending sine waves and not pulses, and a little error in the output wave is acceptable in our applications. We used the same single computer setup as before, and ran the code inputOutputSineWave.m. A result of this test can be seen in Figure 3.

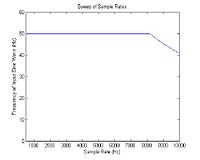

Sine Wave Sweep

Finally, we decided to try a sweep of sine wave frequencies to determine exactly where the input frequency breaks down. The code that was run is called sweepOfSampleRatesSineWave.m. The results of this can be seen in Figure 4. This breakdown occurred at 8100 Hz.

Integration of Systems

To begin

Make sure the National Instruments input and output cards are hooked up and set up with the new computer. On line 39 of the program “integrationTestPutSample.m”, make sure the right device number is chosen. As the program runs now, only the bottom two speakers are controlled, and the left and right speakers don’t move. Make sure the camera is calibrated properly. Run the .m file, and the ball should be bounced to an average height of 20 mm. This is a test of the closed feedback loop from the camera.

Contents of programs

The program consists of two separate programs. There is the MATLAB .m file, “integrationTestPutSample.m”, and the MEX-file, “skeleton.cpp”. The analysis of the data collected is done in MATLAB, where it can be seen in the beginning that a lot of matrices are set up to hold data. Then, the MATLAB program calls the MEX-file, which returns a lot of data, and that data is analyzed in MATLAB. The MEX-file itself takes the pictures and does image processing, calculating the centroid of each picture of the bouncing ball. All the functions in both the .m file and the .cpp file are documented within the programs.

Overview of operation of control system

First, the camera and the framegrabber are initialized by allocating space in memory for the images that will be taken. Then, the camera starts taking pictures at a pre-specified rate (FRAME_TIME in the constants.h file). The three most recent pictures (real ball, reflected ball, and bar) are then taken from the image buffer and the coordinates of each centroid are calculated with some image processing algorithms. Then, these coordinates are used as input to another function in MATLAB to calculate x, y, and z coordinates for the ball and x and z coordinates for the bar. After that, the program checks if the ball is below 14.5 mm from the bar. If it is, half a period of sine wave is input into the speakers, and the ball is bounced back up. If the previous bounce of the ball was above 21 mm, the amplitude of the sine wave is reduced a little. If the previous bounce was below 19 mm, the amplitude of the sine wave is increased a little. This keeps the ball bouncing to approximately 20 mm. This loop then runs for as long as is specified, and afterwards the space allocated for the images is de-allocated.

Timing

The timing of the system is detailed on the timing diagrams (Fig 5 and 6). The idea, which is not fully implemented yet, is to have there be two pictures already taken when the MATLAB program calls the MEX-file, so that the program waits for only one more picture to be taken and then grabs all three and processes them all. To implement this, the frame time has to be just a little shorter than half of the total processing time, including the MEX-file processing and the MATLAB processing. With a frame time of 500 microseconds, the system keeps up fine and can take pictures at 2000 frames per second. However, tweaking the frame time and exposure time to fit more neatly with processing time can make the system more efficient and perhaps even put the rate to over 2000 Hz, depending on how fast the processing can be done.

Next Steps

In the future, more things can be done to further speed up the system. First of all, using separate cores for the camera and processing may speed up the system as the two tasks would run in parallel. This would require shared memory. Another step that can be taken is to implement all the MATLAB computations in C. This would help because C is quicker than MATLAB and because MATLAB wouldn't have to access the MEX-file every time it wants to take a picture. Also, using a real-time operating system like Linux RTAI would also speed up the process. Stripping down Windows makes it close to real-time, but in reality it is not real-time.

Code

#include <windows.h>

#include <stdio.h>

#include <conio.h>

#include <tchar.h>

#define BUF_SIZE 256

TCHAR szName[]=TEXT("Global\\MyFileMappingObject");

//TCHAR szMsg[]=TEXT("What's up (from the first process)?");

int szMsg;

int _tmain()

{

HANDLE hMapFile;

LPCTSTR pBuf;

int ch,image;

int coordinates[3];

hMapFile = CreateFileMapping(

INVALID_HANDLE_VALUE, // use paging file

NULL, // default security

PAGE_READWRITE, // read/write access

0, // max. object size

BUF_SIZE, // buffer size

szName); // name of mapping object

if (hMapFile == NULL)

{

_tprintf(TEXT("Could not create file mapping object (%d).\n"),

GetLastError());

return 1;

}

pBuf = (LPTSTR) MapViewOfFile(hMapFile, // handle to map object

FILE_MAP_ALL_ACCESS, // read/write permission

0,

0,

BUF_SIZE);

if (pBuf == NULL)

{

_tprintf(TEXT("Could not map view of file (%d).\n"),

GetLastError());

CloseHandle(hMapFile);

return 1;

}

for (szMsg=0;szMsg<5;szMsg++) {

image = szMsg*2;

coordinates[0] = image * 2;

coordinates[1] = image * 3;

coordinates[2] = image * 4;

CopyMemory((PVOID)pBuf, coordinates, (3*sizeof(int)));

_cputs( "Type 'Y' to increment counter: " );

do

{

ch = _getch();

ch = toupper( ch );

} while( ch != 'Y' );

_putch( ch );

_putch( '\r' ); // Carriage return

_putch( '\n' ); // Line feed

}

UnmapViewOfFile(pBuf);

CloseHandle(hMapFile);

return 0;

}

#include <windows.h>

#include <stdio.h>

#include <conio.h>

#include <tchar.h>

#pragma comment(lib, "user32.lib")

#define BUF_SIZE 256

TCHAR szName[]=TEXT("Global\\MyFileMappingObject");

int _tmain()

{

HANDLE hMapFile;

LPCTSTR pBuf;

int i;

double coordinates[3];

int temp;

const char *tempChar;

hMapFile = OpenFileMapping(

FILE_MAP_ALL_ACCESS, // read/write access

FALSE, // do not inherit the name

szName); // name of mapping object

if (hMapFile == NULL)

{

_tprintf(TEXT("Could not open file mapping object (%d).\n"),

GetLastError());

return 1;

}

pBuf = (LPTSTR) MapViewOfFile(hMapFile, // handle to map object

FILE_MAP_ALL_ACCESS, // read/write permission

0,

0,

BUF_SIZE);

if (pBuf == NULL)

{

_tprintf(TEXT("Could not map view of file (%d).\n"),

GetLastError());

CloseHandle(hMapFile);

return 1;

}

tempChar = (const char *) pBuf;

temp = atoi(tempChar);

do {

for (i=0; i<3; i++){

*(coordinates+i) = (double) (atoi((const char *) (&(*(pBuf+i)))));

}

} while (1);

UnmapViewOfFile(pBuf);

CloseHandle(hMapFile);

return 0;

}

testInputOutput.m

clear all

close all

% set a desired sample rate of output

sampRate = 5000;

dt = 1/sampRate;

amp = 1;

% set input card's sample rate

sampRateIn = 50000;

% amount of time to acquire data

timeIn = 10;

trigger = timeIn*sampRateIn;

% creat input and output objects

AI = analoginput('mcc',0);

AO = analogoutput('mcc',1);

% add channels

addchannel(AI,[0:5],[1:6]);

addchannel(AO,[0:5],[1:6]);

% set up cards

set(AI,'SampleRate',sampRateIn)

set(AI,'SamplesPerTrigger',trigger)

set(AO,'SampleRate',ceil(1/500))

time = 1/sampRateIn:1/sampRateIn:timeIn;

t = 0:dt:timeIn;

% create an output wave that is just an alternating pulse

outputwave = zeros(length(t),1);

for jj = 1:floor(length(t)/2)

outputwave(2*jj,1) = amp;

end

outputwave(:,2) = outputwave;

outputwave(:,[2 3 4 5 6]) = 0;

temp = rand(10);

% start acquisition

start(AI)

tic

a = 0;

% output data

for ii = 1:length(outputwave)

while(toc<(dt*ii))

end

putsample(AO,outputwave(ii,:));

%z = sampleMexFile(ii,ii,ii);

%fft(outputwave);

%fprintf('pause: %f\n',dt-time);

%data(ii,:) = getsample(AI);

end

% collect data

data = getdata(AI);

stop(AI);

delete(AI)

clear AI

% get meaningful data from the channel that matters

meaningfulData = data(:,1);

plot(time,meaningfulData);

freqData = zeros(trigger,1);

counter = 0;

pulseCounter = 1;

% count number of pulses that have a specific duration

for ii = 2:trigger

if (abs(meaningfulData(ii) - meaningfulData(ii-1)) < .05)

counter = counter + 1;

elseif ((counter ~= 0) && (ii-counter > 3))

freqData(counter) = freqData(counter) + 1;

pulseDuration(pulseCounter) = counter/sampRateIn;

counter = 0;

pulseCounter = pulseCounter + 1;

elseif (ii-counter == 3)

counter = 0;

end

end

average = 1/sampRate;

figure

% create a graph of pulse durations

stem(time(1:2*average*sampRateIn),freqData(1:2*average*sampRateIn));

title(['Number of Pulses with Given Duration, Recorded for ',num2str(timeIn),' Seconds (',num2str(sampRate),' Hz)']);

xlabel('Pulse Duration (sec)');

ylabel('Number of Pulses');

averageDuration = 0;

for jj = 1:trigger

if (freqData(jj) ~= 0)

fprintf('%u pulses had duration of: %f \n',freqData(jj),jj/sampRateIn);

averageDuration = averageDuration + (freqData(jj)*jj/sampRateIn);

end

end

fprintf('\n');

averageDuration = averageDuration/sum(freqData);

fprintf('Average duration of pulses: %f \n\n',averageDuration);

standardDev = 0;

% find the standard deviation

for jj = 1:trigger

if (freqData(jj) ~= 0)

for kk = 1:freqData(jj)

standardDev = standardDev + (averageDuration - (jj/sampRateIn))^2;

end

end

end

standardDev = sqrt(standardDev/(sum(freqData) - 1));

fprintf('Standard deviation of duration of pulses: %f \n\n\n',standardDev);

pulseDuration = pulseDuration - mode(pulseDuration);

figure

% plot how much longer or shorter pulses were than what was requested

stem(pulseDuration/dt);

title('Pulse Duration vs. Time')

xlabel('Time (sec)')

ylabel(['Extra length of pulse in terms of dt (',num2str(dt),' seconds)']);

testOutput.m

clear all

close all

sampRate = 5000;

dt = 1/sampRate;

freq = 30;

timeIn = 30;

amp = 1;

AO = analogoutput('mcc',1);

addchannel(AO,[0:5],[1:6]);

set(AO,'SampleRate',ceil(1/500))

t = 0:dt:timeIn;

outputwave = zeros(length(t),1);

for jj = 1:floor(length(t)/2)

outputwave(2*jj,1) = amp;

end

outputwave(:,2) = outputwave;

outputwave(:,[2 3 4 5 6]) = 0;

tic

for ii = 1:length(outputwave)

while(toc<(dt*ii))

end

putsample(AO,outputwave(ii,:));

%z = sampleMexFile(ii,ii,ii);

%fft(outputwave);

%fprintf('pause: %f\n',dt-time);

%data(ii,:) = getsample(AI);

end

testInputCard.m

clear all

close all

dt = 1/500;

freq = 30;

sampRateIn = 50000;

trigger = 100000;

time = 1/sampRateIn:1/sampRateIn:trigger/sampRateIn;

AI = analoginput('mcc',0);

addchannel(AI,[0:5],[1:6]);

set(AI,'SampleRate',sampRateIn)

set(AI,'SamplesPerTrigger',trigger)

start(AI)

data = getdata(AI);

meaningfulData = data(:,1);

plot(time,meaningfulData);

freqData = zeros(trigger,1);

counter = 0;

for ii = 2:trigger

if (abs(meaningfulData(ii) - meaningfulData(ii-1)) < .05)

counter = counter + 1;

elseif ((counter ~= 0) && (ii-counter > 3))

freqData(counter) = freqData(counter) + 1;

counter = 0;

elseif (ii-counter == 3)

counter = 0;

end

end

figure

stem(time,freqData);

for jj = 1:trigger

if (freqData(jj) ~= 0)

fprintf('%u pulses had duration of: %f \n',freqData(jj),jj/sampRateIn);

end

end

inputOutputSineWave.m

clear all

close all

sampRate = 200;

dt = 1/sampRate;

freq = 50;

amp = 1;

sampRateIn = 50000;

timeIn = 10;

trigger = timeIn*sampRateIn;

AI = analoginput('mcc',0);

AO = analogoutput('mcc',1);

addchannel(AI,[0:5],[1:6]);

addchannel(AO,[0:5],[1:6]);

set(AI,'SampleRate',sampRateIn)

set(AI,'SamplesPerTrigger',trigger)

set(AO,'SampleRate',ceil(1/500))

time = 1/sampRateIn:1/sampRateIn:timeIn;

t = dt:dt:timeIn;

outputwave = amp*sin(2*pi*freq*t);

outputwave = outputwave';

outputwave(:,2) = amp*sin(2*pi*freq*t);

outputwave(:,[3 4 5 6]) = 0;

start(AI)

tic

a = 0;

for ii = 1:length(outputwave)

while(toc<(dt*ii))

end

putsample(AO,outputwave(ii,:));

z = sampleMexFile(ii,ii,ii);

end

data = getdata(AI);

stop(AI);

delete(AI)

clear AI

meaningfulData = data(:,1);

plot(time,meaningfulData);

[YfreqDomain,frequencyRange] = positiveFFT(meaningfulData,sampRateIn);

positiveFFT = figure;

stem(frequencyRange,abs(YfreqDomain));

xlabel('Freq (Hz)')

ylabel('Amplitude')

title('FFT')

grid

axis([0,2*freq,0,amp])

NFFT = 2^nextpow2(length(meaningfulData)); % Next power of 2 from length of y

Y = fft(meaningfulData,NFFT)/length(meaningfulData);

f = sampRateIn/2*linspace(0,1,NFFT/2+1);

figure

plot(f,2*abs(Y(1:NFFT/2+1)))

title(['Single-Sided Amplitude Spectrum of y(t) (',num2str(freq),' Hz) @ ',num2str(sampRate),' Hz'])

xlabel('Frequency (Hz)')

ylabel('|Y(f)|')

axis([0,2*freq,0,amp])

[a,b] = max(Y(1:NFFT/2+1));

actualFreq = f(b);

fprintf(['\nRequested frequency: ',num2str(freq), ' Hz\n']);

fprintf(['Actual frequency: ',num2str(actualFreq), ' Hz\n']);

sweepOfSampleRatesSineWave

clear all

close all

freq = 50;

amp = 1;

sampRateIn = 50000;

timeIn = 10;

trigger = timeIn*sampRateIn;

AI = analoginput('mcc',0);

AO = analogoutput('mcc',1);

addchannel(AI,[0:5],[1:6]);

addchannel(AO,[0:5],[1:6]);

set(AI,'SampleRate',sampRateIn)

set(AI,'SamplesPerTrigger',trigger)

set(AO,'SampleRate',ceil(1/500))

time = 1/sampRateIn:1/sampRateIn:timeIn;

sweep = zeros(100,3);

for sr = 500:100:10000

sampRate = sr;

dt = 1/sampRate;

t = 0:dt:timeIn;

outputwave = amp*sin(2*pi*freq*t);

outputwave = outputwave';

outputwave(:,2) = amp*sin(2*pi*freq*t);

outputwave(:,[3 4 5 6]) = 0;

start(AI)

tic

for ii = 1:length(outputwave)

while(toc<(dt*ii))

end

putsample(AO,outputwave(ii,:));

%fprintf('pause: %f\n',dt-time);

%data(ii,:) = getsample(AI);

end

data = getdata(AI);

stop(AI);

meaningfulData = data(:,1);

NFFT = 2^nextpow2(length(meaningfulData)); % Next power of 2 from length of y

Y = fft(meaningfulData,NFFT)/length(meaningfulData);

f = sampRateIn/2*linspace(0,1,NFFT/2+1);

% figure

% plot(f,2*abs(Y(1:NFFT/2+1)))

% title(['Single-Sided Amplitude Spectrum of y(t) (',num2str(freq),' Hz) @ ',num2str(sampRate),' Hz'])

% xlabel('Frequency (Hz)')

% ylabel('|Y(f)|')

% axis([0,2*freq,0,amp])

[a,b] = max(Y(1:NFFT/2+1));

actualFreq = f(b);

sweep(sr/100,1) = sr;

sweep(sr/100,2) = actualFreq;

end

figure

plot(sweep(:,1), sweep(:,2));

integrationTestPutSample.m

% This program is a simple control system to test the functionality of the

% camera and actuator closed-loop feedback system. The camera takes

% pictures at a predetermined rate (set in the MEX-file). This program

% accesses the MEX-file and takes the three most recent pictures from the

% image buffer. The coordinates of the centroid of each image are then

% calculated. These coordinates are passed back into this program, where

% the x, y, and z coordinates of the ball are computed and the x and z

% coordinates of the bar are computed. After that, the program checks if

% the ball is below 14.5 mm from the bar. If it is, half a period of a

% sine wave is input into the speakers, and the ball is bounced back up.

% If the previous bounce of the ball was above 21 mm, the amplitude of the

% sine wave is reduced a little. If the pervious bounce was below 19 mm,

% the amplitude of the sine wave is increased a little. This keeps the

% ball bouncing to approximately 20 mm. This loop then runs for as long as

% is specified, and afterwards the space allocated for the images is

% de-allocated.

clear all

close all

% load calibration constants

calib = '8-17-09 Calibration Constants';

load(calib);

% compile the MEX-file

mex -L'C:\PROGRA~1\SiliconSoftware3.2\lib\visualc' -L'C:\Program Files\OpenCV\lib' -ldisplay_lib -lclserme3 -lclsersisome3 -lfastconfig -lfglib3.1 -lfglib3 -liolib -lmenable3 -lhighgui -lcv -lcvaux -lcvcam -lcvhaartraining -lcxcore -lcxts -lml skeleton.cpp cam.cpp imgproc.cpp roi.cpp tracking.cpp utils.cpp

% initialize camera and framegrabber

skeleton(0);

% set the sample rate of the data input into the speakers

sampRate = 2000;

dt = 1/sampRate;

% set the frequency of the sine wave

freq = 30;

amp = 2;

timeOnePeriod = 1/freq;

% create an analog output object

AO = analogoutput('nidaq','Dev3');

% add 2 channels for the two bottom speakers

addchannel(AO,2:3);

% set a sample rate for the output (only matters is the command "putdata"

% is used).

set(AO,'SampleRate',sampRate);

t = 0:dt:timeOnePeriod/2;

% create output wave

outputwave = amp*sin(2*pi*freq*t);

outputwave = outputwave';

outputwave(:,2) = amp*sin(2*pi*freq*t);

% set the length of the loop

lenLoop = 10000;

% initialize vectors to store useful data

height_ball = zeros(1,lenLoop);

height_bar = zeros(1,lenLoop);

proportional = zeros(1,lenLoop);

multArray = zeros(1,lenLoop);

timeArray = zeros(2,lenLoop);

eventTimer = zeros(1,3*lenLoop);

imageNumber = zeros(1,3*lenLoop);

times = zeros(5,lenLoop);

% initialize useful variables

previousHeight = 0;

goingDown = 0;

time_ball(1) = 0;

multiplier = 2;

P = 13.8;

ii = 1;

% start actual control system

while ii<(lenLoop+1)

% access the MEX-file and store results in variable "temp"

temp = skeleton(1);

% use proper entries from "temp" to create inputs for the

% calc_coords3ROI function

XZ = [1 temp(3) temp(1) temp(2)];

YZ = [1 temp(6) temp(4) temp(5)];

bar_tracking = [1 temp(9) temp(7) temp(8)];

%eventTimer((3*ii)-2:(3*ii)) = [temp(3) temp(6) temp(9)];

%imageNumber((3*ii)-2:(3*ii)) = [temp(10) temp(11) temp(12)];

% store the image number, image number modulo 3, time to get first

% image number, time to get rest of images, and time to process images

times(:,ii) = [temp(13); temp(14); temp(15); temp(16); temp(17)];

% calculate coordinates

[XYZ_BALL, XZ_BAR] = calc_coords3ROI(XZ,YZ,bar_tracking);

% check if bar has to bounce

if XYZ_BALL(5) > 13.9 && XYZ_BALL(5) < 14.5

% check if ball bounced too high

if P > 21

% adjust amplitude of output wave

multiplier = multiplier - .1;

% check if ball bounced too low

elseif P < 19 && multiplier < 4

% adjust amplitude of output wave

multiplier = multiplier + .1;

end

tic

% load output wave into speakers

for kk = 1:length(outputwave)

while(toc<(dt*kk))

end

temp2 = toc;

putsample(AO,multiplier*outputwave(kk,:));

temp = skeleton(1);

XZ = [1 temp(3) temp(1) temp(2)];

YZ = [1 temp(6) temp(4) temp(5)];

bar_tracking = [1 temp(9) temp(7) temp(8)];

%eventTimer((3*ii)-2:(3*ii)) = [temp(3) temp(6) temp(9)];

%imageNumber((3*ii)-2:(3*ii)) = [temp(10) temp(11) temp(12)];

times(:,ii) = [temp(13); temp(14); temp(15); temp(16); temp(17)];

[XYZ_BALL, XZ_BAR] = calc_coords3ROI(XZ,YZ,bar_tracking);

timeArray(1,ii) = toc - temp2;

height_bar(ii) = XZ_BAR(4);

height_ball(ii) = XYZ_BALL(5);

ii = ii + 1;

if ii>(lenLoop-1)

break

end

end

timeArray(2,ii) = toc;

multArray(ii) = multiplier;

% if ball settles on lens, wait one second and then bounce it back up

elseif XYZ_BALL(5) < 13.9

tic

while XYZ_BALL(5) < 13.9

temp = skeleton(1);

XZ = [1 temp(3) temp(1) temp(2)];

YZ = [1 temp(6) temp(4) temp(5)];

bar_tracking = [1 temp(9) temp(7) temp(8)];

[XYZ_BALL, XZ_BAR] = calc_coords3ROI(XZ,YZ,bar_tracking);

if toc > 1

tic

for kk = 1:length(outputwave)

while(toc<(dt*kk))

end

putsample(AO,4*outputwave(kk,:));

temp = skeleton(1);

XZ = [1 temp(3) temp(1) temp(2)];

YZ = [1 temp(6) temp(4) temp(5)];

bar_tracking = [1 temp(9) temp(7) temp(8)];

[XYZ_BALL, XZ_BAR] = calc_coords3ROI(XZ,YZ,bar_tracking);

height_bar(ii) = XZ_BAR(4);

height_ball(ii) = XYZ_BALL(5);

ii = ii + 1;

end

end

end

end

% record the height of the previous bounce

if XYZ_BALL(5) < previousHeight && goingDown == 0

goingDown = 1;

P = previousHeight;

proportional(ii) = P;

elseif XYZ_BALL(5) > previousHeight && goingDown == 1

goingDown = 0;

end

previousHeight = XYZ_BALL(5);

height_ball(ii) = XYZ_BALL(5);

height_bar(ii) = XZ_BAR(4);

ii = ii + 1;

end

% de-allocate memory

skeleton(2);

% make plots of data

figure

plot(height_ball);

title('Height of Ball');

figure

stem(proportional);

title('Max Height of Each Bounce');

figure

stem(multArray);

title('Multiplier');

figure

plot(height_bar);

title('Height of Bar');

% figure

% plot(timeArray(1,:));

% figure

% stem(imageNumber,eventTimer);

% title('Time for Event');

% find average height of bounces

j = 1;

for i = 1:length(proportional)

if proportional(i) ~= 0

temporary(j) = proportional(i);

j = j+1;

end

end

mean(temporary)

% j = 1;

% for i = 1:length(eventTimer)/3

% temporary3(j) = mean(eventTimer((3*i)-2:(3*i)));

% j = j+1;

% end

% figure

% stem(temporary3);

% mean(temporary3)

skeleton.cpp

/**

* @file skeleton.cpp displays code for a simple one ROI tracker

*

* skeleton.cpp contains the necessary components to start and modify the vision system for

* application-specific tracking/image processing tasks. The code is comprised of three

* functions: set_initial_positions(...), display_tracking(...), and main().

*

* set_initial_positions(...) shows one possible initialization strategy for the camera's

* ROI. This implementation simply takes the known initial position of the object to track

* (the blob) and centers the camera's ROI bounding box around that center.

*

* display_tracking(...) is a sample application of interest for showing (processed) camera

* frames. A function like this also provides visual feedback of what is going on

* internally in the system and is an invaluable debugging tool (see display_run.cpp for

* a more complicated example).

*

* Finally, main() is the starting point of the program. Its role is to initialize the

* camera and run the logic necessary for tracking objects.

*

* Most, if not all, applications will follow this recipe of initialization routines,

* application-specific routines, and finally a main program that sits in a loop acquiring

* the next image to process. A few common setup and helper functions can be found in

* utils.cpp.

*/

#include "fcdynamic.h"

#include "constants.h"

#include "mex.h"

/** Grabs an image from the camera and displays the image on screen

*

* The purpose of this program is to show how to get the camera up and running.

* Modifications can be made by modifying the while(...) loop with different image

* processing and tracking logic.

*/

// important variables used in most applications

int rc;

Fg_Struct *fg = NULL;

TrackingWindow cur[NUM_ROI];

int index = 0;

int seq[] = SEQ;

int takedata = 0; // takedata has value 0 or 1 depending on whether data is being recorded

char key = '0'; // initialize command key

int timeMs; // variable for recording time in microseconds

int inittime; // initial time when data collection begins

int counter = 0; // Counts the number of images taken

FrameInfo timing;

IplImage *cvDisplay[NUM_ROI];

// allocate resources

void initialization(void) {

// following lines are for displaying images only! See OpenCV doc for more info.

// they can be left out, if speed is important.

//IplImage *cvDisplay[NUM_ROI];

#if DISPLAY_TRACKING

cvDisplay[0] = cvCreateImageHeader(cvSize(ROI_BOX_W0, ROI_BOX_H0),

BITS_PER_PIXEL, NUM_CHANNELS);

cvNamedWindow(DISPLAY0, CV_WINDOW_AUTOSIZE);

cvDisplay[1] = cvCreateImageHeader(cvSize(ROI_BOX_W1, ROI_BOX_H1),

BITS_PER_PIXEL, NUM_CHANNELS);

cvNamedWindow(DISPLAY1, CV_WINDOW_AUTOSIZE);

cvDisplay[2] = cvCreateImageHeader(cvSize(ROI_BOX_W2, ROI_BOX_H2),

BITS_PER_PIXEL, NUM_CHANNELS);

cvNamedWindow(DISPLAY2, CV_WINDOW_AUTOSIZE);

#endif

// initialize the tracking window (i.e. blob and ROI positions)

memset(&cur[0], 0, sizeof(TrackingWindow));

set_initial_positions(&cur[0]);

memset(&cur[1], 0, sizeof(TrackingWindow));

set_initial_positions2(&cur[1]);

memset(&cur[2], 0, sizeof(TrackingWindow));

set_initial_positions3(&cur[2]);

// initialize the camera

rc = init_cam(&fg, MEMSIZE(cur[0].roi_w, cur[0].roi_h), NUM_BUFFERS, CAMLINK);

if(rc != FG_OK) {

printf("init: %s\n", Fg_getLastErrorDescription(fg));

Fg_FreeGrabber(fg);

//return rc;

}

// start acquiring images (this function also writes any buffered ROIs to the camera)

rc = acquire_imgs(fg, (int *) &seq, SEQ_LEN);

if(rc != FG_OK) {

printf("init: %s\n", Fg_getLastErrorDescription(fg));

Fg_FreeGrabber(fg);

//return rc;

}

// initialize parameters

//img_nr = 1;

int initnum;

QueryPerformanceFrequency(&timing.freq);

}

// de-allocate memory

void memclear(void) {

// free camera resources

rc = deinit_cam(fg);

if(rc != FG_OK) {

printf("deinit: %s\n", Fg_getLastErrorDescription(fg));

//return rc;

}

}

// get the images and process them

void takePicture(double *coords)

{

int i;

static int img_nr = 1;

// start a timer

QueryPerformanceCounter(&timing.grab_start);

// get number of last image acquired

img_nr = Fg_getLastPicNumberBlocking(fg, 1, PORT_A, TIMEOUT);

// stop timer

QueryPerformanceCounter(&timing.grab_stop);

// record image number

coords[12] = img_nr;

// record image number modulo 3

coords[13] = img_nr%3;

// record time it took to acquire last image

coords[14] = (double) (timing.grab_stop.QuadPart - timing.grab_start.QuadPart)/timing.freq.QuadPart;

QueryPerformanceCounter(&timing.grab_start);

// camera has taken picture of the real ball

if (img_nr%3 == 1) {

cur[0].img = (unsigned char *) Fg_getImagePtr(fg, img_nr, PORT_A);

img_nr = Fg_getLastPicNumberBlocking(fg, img_nr+1, PORT_A, TIMEOUT);

cur[1].img = (unsigned char *) Fg_getImagePtr(fg, img_nr, PORT_A);

img_nr = Fg_getLastPicNumberBlocking(fg, img_nr+1, PORT_A, TIMEOUT);

cur[2].img = (unsigned char *) Fg_getImagePtr(fg, img_nr, PORT_A);

}

// camera has taken picture of the reflected ball

else if (img_nr%3 == 2) {

cur[1].img = (unsigned char *) Fg_getImagePtr(fg, img_nr, PORT_A);

cur[0].img = (unsigned char *) Fg_getImagePtr(fg, img_nr-1, PORT_A);

img_nr = Fg_getLastPicNumberBlocking(fg, img_nr+1, PORT_A, TIMEOUT);

cur[2].img = (unsigned char *) Fg_getImagePtr(fg, img_nr, PORT_A);

}

// camera has taken picture of the bar

else {

cur[2].img = (unsigned char *) Fg_getImagePtr(fg, img_nr, PORT_A);

cur[1].img = (unsigned char *) Fg_getImagePtr(fg, img_nr-1, PORT_A);

cur[0].img = (unsigned char *) Fg_getImagePtr(fg, img_nr-2, PORT_A);

}

QueryPerformanceCounter(&timing.grab_stop);

// record time to get images

coords[15] = (double) (timing.grab_stop.QuadPart - timing.grab_start.QuadPart)/timing.freq.QuadPart;

QueryPerformanceCounter(&timing.grab_start);

for (index=0;index<3;index++) {

// process image

threshold(&cur[index], THRESHOLD);

erode(&cur[index]);

// calculate centroid

centroid(&cur[index]);

// Transform coordinates from camera frame to realworld frame

if (index == 1) {

trans_coords2(&cur[index]);

}

else {

trans_coords(&cur[index]);

}

// create timestamp

timeMs = img_nr;

Fg_getParameter(fg, FG_TIMESTAMP,

&timeMs, PORT_A);

//printf("\n%d\n", time);

// updata position

position(&cur[index]);

// at this point position(...) has updated the ROI, but it only stores

// the updated values internal to the code. The next step is to flushq

// the ROI to the camera (see position(...) documentation).

// write ROI position to camera to be updated on frame "img_nr"

write_roi(fg, cur[index].roi, img_nr + 6, !DO_INIT);

// show image on screen

if (DISPLAY_TRACKING) {

display_tracking(&cur[index], cvDisplay[index]);

}

// record data for reflected ball image

if (index%3 == 1) {

coords[5] = (double) (timing.grab_stop.QuadPart - timing.grab_start.QuadPart)/timing.freq.QuadPart;

coords[3] = (double) cur[1].xc;

coords[4] = (double) cur[1].yc;

//printf("%f,%f,%f \n",coords[3],coords[4],coords[5]);

coords[10] = img_nr;

}

// record data for bar image

else if (index%3 == 2) {

coords[8] = (double) (timing.grab_stop.QuadPart - timing.grab_start.QuadPart)/timing.freq.QuadPart;

coords[6] = (double) cur[2].xc;

coords[7] = (double) cur[2].yc;

//printf("%f,%f,%f \n",coords[6],coords[7],coords[8]);

coords[11] = img_nr;

}

// record data for real ball image

else {

coords[2] = (double) (timing.grab_stop.QuadPart - timing.grab_start.QuadPart)/timing.freq.QuadPart;

coords[0] = (double) cur[0].xc;

coords[1] = (double) cur[0].yc;

//printf("%f,%f \n",cur[0].xc,cur[0].yc);

coords[9] = img_nr;

}

}

QueryPerformanceCounter(&timing.grab_stop);

// record time to process images

coords[16] = (double) (timing.grab_stop.QuadPart - timing.grab_start.QuadPart)/timing.freq.QuadPart;

// old method of executing this code, wherein each time the MEX-file is accessed,

// first one picture is taken and processed, then the next, and then the next. That

// method could be sped up with the technique that is employed now. I left the code

// in comment below just in case it is ever needed again.

/*

for (i=0;i<3;i++) {

QueryPerformanceCounter(&timing.grab_start);

img_nr = Fg_getLastPicNumberBlocking(fg, img_nr, PORT_A, TIMEOUT);

QueryPerformanceCounter(&timing.grab_stop);

// Obtain index for ROI based on image number

if (img_nr%3 == 1) {

index = 0;

}

else if (img_nr%3 == 2) {

index = 1;

}

else {

index = 2;

}

cur[index].img = (unsigned char *) Fg_getImagePtr(fg, img_nr, PORT_A);

// make sure that camera returned a valid image

if (cur[index].img == NULL) {

// typically this state only occurs if an invalid ROI has been programmed

// into the camera (e.g. roi_w == 4).

printf("img is null: %d\n", img_nr);

//system("PAUSE");

//break;

}

// process image

threshold(&cur[index], THRESHOLD);

erode(&cur[index]);

// calculate centroid

centroid(&cur[index]);

// Transform coordinates from camera frame to realworld frame

if (index == 1) {

trans_coords2(&cur[index]);

}

else {

trans_coords(&cur[index]);

}

// create timestamp

timeMs = img_nr;

Fg_getParameter(fg, FG_TIMESTAMP,

&timeMs, PORT_A);

//printf("\n%d\n", time);

// updata position

position(&cur[index]);

// at this point position(...) has updated the ROI, but it only stores

// the updated values internal to the code. The next step is to flushq

// the ROI to the camera (see position(...) documentation).

// write ROI position to camera to be updated on frame "img_nr"

write_roi(fg, cur[index].roi, img_nr + 6, !DO_INIT);

// show image on screen

if (DISPLAY_TRACKING) {

display_tracking(&cur[index], cvDisplay[index]);

}

// increment to the next desired frame. This has to be at least

// +2, because the camera's ROI will not be active until the second

// frame (see Silicon Software FastConfig doc)

//img_nr += NEXT_IMAGE;

//QueryPerformanceCounter(&timing.grab_stop);

if (img_nr%3 == 2) {

coords[5] = (double) (timing.grab_stop.QuadPart - timing.grab_start.QuadPart)/timing.freq.QuadPart;

coords[3] = (double) cur[1].xc;

coords[4] = (double) cur[1].yc;

//printf("%f,%f,%f \n",coords[3],coords[4],coords[5]);

coords[10] = img_nr;

}

else if (img_nr%3 == 0) {

coords[8] = (double) (timing.grab_stop.QuadPart - timing.grab_start.QuadPart)/timing.freq.QuadPart;

coords[6] = (double) cur[2].xc;

coords[7] = (double) cur[2].yc;

//printf("%f,%f,%f \n",coords[6],coords[7],coords[8]);

coords[11] = img_nr;

}

else {

coords[2] = (double) (timing.grab_stop.QuadPart - timing.grab_start.QuadPart)/timing.freq.QuadPart;

coords[0] = (double) cur[0].xc;

coords[1] = (double) cur[0].yc;

//printf("%f,%f \n",cur[0].xc,cur[0].yc);

coords[9] = img_nr;

}

img_nr += NEXT_IMAGE;

}

*/

}

// MEX function

void mexFunction( int nlhs, mxArray *plhs[],

int nrhs, const mxArray *prhs[])

{

int select; /* input scalar */

double *outMatrix; /* output matrix */

/* get the value of the selector */

select = mxGetScalar(prhs[0]);

/* create the output matrix */

plhs[0] = mxCreateDoubleMatrix(1,17,mxREAL);

/* get a pointer to the real data in the output matrix */

outMatrix = mxGetPr(plhs[0]);

// choose which function to execute based on input from MATLAB

if (select == 0) {

initialization();

}

else if (select == 1) {

takePicture(outMatrix);

}

else {

memclear();

}

}