Difference between revisions of "Machine Vision Localization System"

MatthewElwin


__TOC__

=Project Files=
*[[media:vision_system_localization_project.zip|'''Vision_system_localization_project.zip''']] '''(Swarm Consensus Estimation project files with source code)'''
*[[media:RGB_Machine_Vision_Localization_System.zip|'''RGB_Machine_Vision_Localization_System.zip''']]
** The RGB vision system follows the same setup process as the original Swarm Consensus vision system, so please follow the steps below.
** Any requirements or changes to the original steps that are specific to the RGB vision system are noted in the steps below.

=Overview=
This is a machine vision system that tracks multiple targets and can send their positions out via the serial port. It was developed specifically for the [[Swarm_Robot_Project_Documentation|Swarm Robotics project]], but can be adapted for other uses. It is based upon the [[Indoor_Localization_System|Indoor Localization System]], but has several enhancements and bug fixes. Refer to [[Indoor_Localization_System|Indoor Localization System]] for a basic overview of the setup of the system and the workings of the pattern identification algorithm.

==Major Enhancements/Changes==
* The system will now mark the targets with an overlay and display coordinate data onscreen.
* The serial output is now formatted for the XBee radio using the XBee's API mode with escape characters.
* The calibration routine has been improved, and only needs to be performed once.
* A command interface for sending out commands via the serial port has been added.
* The system will discard targets too close to the edge of the camera frame to prevent misidentification due to clipping.
* The origin of the world coordinate system is now in the middle, not the lower left corner.
* The GUI displaying the camera frames is now full sized instead of a thumbnail. If your monitor isn't big enough, you can resize the windows.
* The output coordinates are now corrected to the center of mass of the robot rather than that of the LED light pattern.
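The latest revision lists serial output in XBee API mode with escape characters among the enhancements. The function below is a minimal sketch of what that framing involves. It is based on the general XBee API-mode rules: a 0x7E start delimiter, a two-byte length, the frame data, and a checksum equal to 0xFF minus the low byte of the frame-data sum. The bytes 0x7E, 0x7D, 0x11 and 0x13 are escaped as 0x7D followed by the byte XOR 0x20. The sketch is not taken from the project source. The frame-data contents (API identifier, destination address, position payload) are left to the caller, and `buildEscapedApiFrame` is a hypothetical name.

```cpp
#include <cassert>
#include <cstdint>
#include <vector>

// Bytes that must be escaped when the XBee runs in API mode with escaping (AP=2).
static bool needsEscape(std::uint8_t b) {
    return b == 0x7E || b == 0x7D || b == 0x11 || b == 0x13;
}

// Append one byte, replacing it with 0x7D, (byte XOR 0x20) if it needs escaping.
static void appendEscaped(std::vector<std::uint8_t>& out, std::uint8_t b) {
    if (needsEscape(b)) {
        out.push_back(0x7D);
        out.push_back(static_cast<std::uint8_t>(b ^ 0x20));
    } else {
        out.push_back(b);
    }
}

// Wrap frameData (API identifier + payload) in an escaped API frame.
// Length and checksum are computed on the unescaped frame data.
std::vector<std::uint8_t> buildEscapedApiFrame(const std::vector<std::uint8_t>& frameData) {
    assert(frameData.size() <= 0xFFFF);
    std::vector<std::uint8_t> out;
    out.push_back(0x7E);  // the start delimiter itself is never escaped
    const std::uint16_t len = static_cast<std::uint16_t>(frameData.size());
    appendEscaped(out, static_cast<std::uint8_t>(len >> 8));    // length MSB
    appendEscaped(out, static_cast<std::uint8_t>(len & 0xFF));  // length LSB
    std::uint8_t sum = 0;
    for (std::uint8_t b : frameData) {
        sum = static_cast<std::uint8_t>(sum + b);  // keep only the low 8 bits
        appendEscaped(out, b);
    }
    appendEscaped(out, static_cast<std::uint8_t>(0xFF - sum));  // checksum
    return out;
}
```

For example, the frame data `08 01 4E 4A` (an AT command frame) produces `7E 00 04 08 01 4E 4A 5E`, and none of its bytes needs escaping.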

==Major Bug Fixes==
* Two major memory leaks have been fixed.
* Calibration matrices are now calculated correctly.
* A file handling bug that caused an off-by-one error in '''LoadTargetData()''' has been fixed.

==Camera Calibration Routine==
The camera calibration routine used is explained in the document [[Media:Image_Formation_and Camera_Calibration.pdf | Image_Formation_and Camera_Calibration.pdf]] by Professor Ying Wu.

==Target Patterns==
*[[media:TrackSysV1_6_20_08.zip|'''TrackSysV1_6_20_08.zip''']] '''(Target patterns and preprocessor)'''
**For actual images of the target patterns: '''TrackSysTraining(folder) >> images(folder) >> FinalSet(folder) >> 1,2,3,...,24'''

The target patterns and preprocessor can also be downloaded at [[Indoor_Localization_System#Pre-processing_program_source_with_final_patterns:]].

=OpenCV Documentation=
[http://opencv.willowgarage.com/wiki/ OpenCV Wiki]

=Operation=

==Setting up the Cameras==

{| |

|||

[[Image:camera_setup.png|300px|thumb|left]] |

|||

| [[Image:camera_setup.png|375px|thumb|Camera setup]] |

|||

<br clear='all'> |

|||

| [[Image:machine_vision_single_frame.png|330px|thumb|Targets found in the margin are discarded]] |

|||

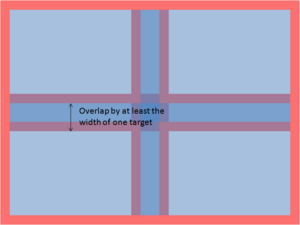

| [[Image:machine_vision_four_frames.png|300px|thumb|The frames must overlap by the width of one target]] |

|||

|} |

|||

The cameras are set up and operate as described in the [[Indoor_Localization_System|Indoor Localization System]] entry, with one change: targets at the border of the camera frame are now discarded. This prevents a pattern from being misidentified when one or more of its dots fall outside the frame. It also means the camera views must overlap enough that a target in the dead zone of one camera is picked up by another camera.

The height of the cameras determines how small the patterns can be before they become indistinguishable. Changing the height also changes the focus, so manually readjust the focus of the cameras whenever the height is changed.
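The border rule above can be expressed as a small test. This sketch assumes each detected target is summarized by its pixel bounding box and that the margin is a tunable width in pixels. `PixelBox`, `keepTarget`, and the `margin` parameter are hypothetical names, not taken from the project. Under these assumptions, a target is kept only if it lies entirely at least `margin` pixels inside the frame. For a target to always be kept by some camera, adjacent views therefore need to overlap by at least one target width plus twice the margin, measured on the floor.

```cpp
#include <cassert>

// Axis-aligned bounding box of a detected target, in pixel coordinates.
struct PixelBox {
    int minX, minY, maxX, maxY;
};

// Returns true if the whole box lies at least `margin` pixels inside a
// width x height frame; otherwise the target is in the dead zone and is dropped.
bool keepTarget(const PixelBox& box, int width, int height, int margin) {
    assert(margin >= 0 && width > 2 * margin && height > 2 * margin);
    return box.minX >= margin && box.minY >= margin &&
           box.maxX <= width - 1 - margin && box.maxY <= height - 1 - margin;
}
```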

|||

== How to Use The System == |

|||

=== Camera Setup === |

|||

[[Image:visual_localization_viewing_angle.jpg|right|thumb|300px|Approximate Viewing Angle of Logitech Cameras]] |

|||

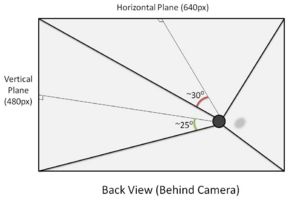

The four cameras used were standard Logitech QuickCam Communicate Deluxes. For future use, the videoInput library used is very compatible and works with most capture devices. As measured, the viewing angle (from center) of the Logitech cameras was around 30 degrees (horizontal plane) and 25 degrees (vertical plane). |

|||

Before attaching the cameras, several considerations must be made. |

|||

'''1. Choose a desired ALL WHITE area to cover.''' '''***IMPORTANT: If you want to be able to cover an continuous region, the images as seen by the cameras must overlap to ensure a target is at least fully visible in one frame of a camera***''' Keep in mind that there is a trade-off between area, and resolution. In addition, the size of the patterns will have to be increased above the threshold of noise. |

|||

* RGB Vision System: the floor color is trivial with the RGB vision system. It may be necessary to select a non-reflective/matte floor surface to reduce the number of aberrant reflections from the floor to the vision system |

|||

'''2. Ensure that the cameras are all facing the same direction. ''' As viewed from above, the "top" of the cameras should all be facing the same direction (N/S/E/W). For future use, if these directions must be variable, the image reflection and rotation parameters can be adjusted in software (though this has not been implemented). |

|||

'''3. Try to mount the cameras as "normal" as possible.''' Although the camera calibration should determine the correct pose information, keeping the lenses of the cameras as normal as possible will reduce the amount of noise at the edges of the images. |

|||

<br clear=all> |
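The area/resolution trade-off in consideration 1 can be estimated from the measured viewing angles. This is a minimal sketch. It assumes a pinhole camera pointing straight down, ignores lens distortion, and treats the 30 and 25 degree figures as half-angles from the optical axis. The 2000 mm height and 640-pixel image width used below are illustrative values, not measurements from this setup.

```cpp
#include <cassert>
#include <cmath>

// Floor extent seen by one camera, in millimeters.
struct Footprint {
    double widthMm;   // along the image x axis
    double heightMm;  // along the image y axis
};

// Floor area seen by a camera looking straight down from heightMm, given the
// half viewing angles (measured from the optical axis) in degrees.
Footprint floorFootprint(double heightMm, double halfAngleHDeg, double halfAngleVDeg) {
    assert(heightMm > 0.0);
    const double kPi = 3.14159265358979323846;
    const double toRad = kPi / 180.0;
    return {2.0 * heightMm * std::tan(halfAngleHDeg * toRad),
            2.0 * heightMm * std::tan(halfAngleVDeg * toRad)};
}

// Approximate floor distance covered by one pixel along the image x axis.
double mmPerPixel(const Footprint& fp, int imageWidthPx) {
    assert(imageWidthPx > 0);
    return fp.widthMm / imageWidthPx;
}
```

At 2000 mm this gives a footprint of about 2309 mm by 1865 mm, or roughly 3.6 mm per pixel across a 640-pixel-wide image. Raising the cameras covers more floor but increases the millimeters per pixel, so the patterns must grow to stay distinguishable.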

== Computer Setup ==
In the current implementation, the system has been developed for a Windows-based computer (as required by the videoInput library). The system should run on Windows XP or Vista. To set up the computer to develop and run the software, install the following drivers, tools, and libraries.

1. Download and install the Logitech QuickCam Communicate Deluxe webcam drivers, available at \\mcc.northwestern.edu\dfs\me-labs\lims\Swarms\LogitechDrivers.zip or http://www.logitech.com/index.cfm/435/3057&cl=us,en.
* If you download them from the Logitech website, you need BOTH versions of the software (qc1150 and lws110). These are available under downloads as "Logitech webcam software with Vid" and "Quickcam v. 11.5".
* Install qc1150 first, and restart as requested.
* Install lws110 (note that you do not need the Vid software), and restart as requested.
* Device Manager -> Imaging devices should display a list of 4 cameras. For each camera, right-click, choose Update driver software, Browse my computer, Let me pick from a list, and select QuickCam Communicate Deluxe Version: 11.5.0.1145. Click Next to install the drivers. Do this for all 4 cameras before restarting.
* You now have the new software (for controlling the cameras individually) and the old drivers (for working with the vision system).

2. Install Microsoft Visual Studio Professional (the Express Edition is incompatible with some of the code).

3. Download and install the Microsoft Windows Platform SDK: http://www.microsoft.com/downloads/details.aspx?FamilyId=0BAF2B35-C656-4969-ACE8-E4C0C0716ADB&displaylang=en

4. Download and install the Microsoft DirectX 9+ SDK: http://www.microsoft.com/downloads/details.aspx?FamilyId=572BE8A6-263A-4424-A7FE-69CFF1A5B180&displaylang=en

5. Download and install the Intel OpenCV Library: http://sourceforge.net/projects/opencvlibrary/

6. Download and install the videoInput Library: http://muonics.net/school/spring05/videoInput/

7. Download the source code for the vision system here: [[Media:vision_system_localization_project.zip|Vision System Localization Project]]

The project will probably not compile immediately after you open it, because the correct include and library directories must first be added to the environment. In Visual C++, go to Tools > Options > Projects and Solutions > VC++ Directories and select '''Include files''' in the drop-down menu. Add the following directories '''(the paths on your computer may differ depending on your system and the versions you installed)''':

* C:\Program Files\OpenCV\otherlibs\highgui
* C:\Program Files\OpenCV\otherlibs\cvcam\include
* C:\Program Files\OpenCV\cvaux\include
* C:\Program Files\OpenCV\cxcore\include
* C:\Program Files\OpenCV\cv\include
* C:\Program Files\Microsoft SDKs\Windows\v6.1\Include

Then select '''Library files''' from the drop-down menu and add:
* C:\Program Files\Microsoft SDKs\Windows\v6.1\Lib
* C:\Users\LIMS\Documents\Visual Studio 2005\Libraries\videoInput0.1991\videoInputSrcAndDemos\libs\DShow\lib
* C:\Users\LIMS\Documents\Visual Studio 2005\Libraries\videoInput0.1991\compiledLib\compiledByVS2005
* C:\Users\LIMS\Documents\Visual Studio 2005\Libraries\videoInput0.1991\videoInputSrcAndDemos\libs\videoInput

==Starting the Program==
# Compile and run the program.
# When you first run the program, it will open a console window and ask you to enter a COM port number. This is the number of the serial port of the XBee radio that will be used to send out data. Enter the number and press Enter (e.g. <tt>Enter COM number: 1<enter></tt>).
#* Be sure to configure the XBee radio appropriately. You can find instructions [[Swarm_Robot_Project_Documentation#Configuration_for_base_station.2Fdata_logger_XBee_radios|here]].
# The program should now connect to the cameras and open two new windows: one with numbered quadrants (the GUI window), and one displaying the view of one of the cameras. Use your mouse to click on the quadrant that corresponds to the camera view. Repeat until all four cameras have been matched to the quadrants.
# Make sure the GUI window is selected, and press Enter.
# Make sure the GUI window is selected, then press Enter.
# Remove any calibration patterns, and press Enter. The program should now be running.
# When you turn on the robots, they will be in '''sleep''' mode. Use the '''wake''' command to start them.

==Camera Calibration==
=== Theory ===
The purpose of camera calibration is to map pixel coordinates in the camera's image to coordinates on the floor.

We assume that the camera can be modeled with the pinhole model: all rays of light from the floor pass through a single point and are projected onto the camera's image plane.

Essentially, we are mapping points from one plane (the image) to another (the floor) through a point (the pinhole). [http://en.wikipedia.org/wiki/Homography Homography] is the mathematical term for this type of plane-to-plane mapping, and it describes the camera well when lens distortion is small.

Let <math>(x,y)</math> be the coordinates of a point on the floor plane, <math>(u,v)</math> be a point on the camera image plane, and <math>\lambda</math> be a scalar.

We use homogeneous coordinates to represent the transformation from the image plane to the world plane as:

<math>\begin{bmatrix} \lambda x \\ \lambda y \\ \lambda \end{bmatrix}
= \begin{bmatrix}
h_{11} & h_{12} & h_{13} \\
h_{21} & h_{22} & h_{23} \\
h_{31} & h_{32} & 1
\end{bmatrix}
\begin{bmatrix} u \\ v \\ 1 \end{bmatrix}</math>.
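As a sketch of the relationship above, the function below multiplies the homogeneous image point by the 3×3 matrix and divides by the third component, <math>\lambda</math>, to get floor coordinates. `Mat3`, `Point2`, and `imageToFloor` are illustrative names, not identifiers from the project source.

```cpp
#include <array>
#include <cassert>
#include <cmath>

using Mat3 = std::array<std::array<double, 3>, 3>;  // row-major, H[2][2] = 1

struct Point2 {
    double x, y;
};

// Map an image point (u, v) to floor coordinates (x, y) with homography H.
Point2 imageToFloor(const Mat3& H, double u, double v) {
    const double lx = H[0][0] * u + H[0][1] * v + H[0][2];      // lambda * x
    const double ly = H[1][0] * u + H[1][1] * v + H[1][2];      // lambda * y
    const double lambda = H[2][0] * u + H[2][1] * v + H[2][2];  // lambda
    assert(std::fabs(lambda) > 1e-12);  // point must not map to infinity
    return {lx / lambda, ly / lambda};
}
```

With <math>h_{31} = h_{32} = 0</math> the mapping is affine and <math>\lambda = 1</math>. Nonzero <math>h_{31}, h_{32}</math> add the perspective effect of a tilted camera.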

Suppose we have a group of points on the floor <math>(x_1,y_1),\cdots,(x_N,y_N)</math> whose corresponding image coordinates are <math>(u_1,v_1),\cdots,(u_N,v_N)</math>. Using <math>(u_i,v_i)</math> and <math>(x_i,y_i)</math>, we need to determine the <math>h_{ij}</math>. Following the procedure in Section 5.2 of [[Media:Image_Formation_and Camera_Calibration.pdf | ''Image Formation and Camera Calibration'']], we obtain the following equation:

<math> \underbrace{\begin{bmatrix}
u_1 & v_1 & 1 & 0 & 0 & 0 & -u_1x_1 & -v_1x_1 \\
0 & 0 & 0 & u_1 & v_1 & 1 & -u_1y_1 & -v_1y_1 \\
\vdots & & \vdots & & \vdots & & \vdots & \\
u_N & v_N & 1 & 0 & 0 & 0 & -u_Nx_N & -v_Nx_N \\
0 & 0 & 0 & u_N & v_N & 1 & -u_Ny_N & -v_Ny_N
\end{bmatrix}}_M
\underbrace{\begin{bmatrix}
h_{11} \\ h_{12} \\ h_{13} \\ h_{21} \\ h_{22} \\ h_{23} \\ h_{31} \\ h_{32}
\end{bmatrix}}_H
=\underbrace{\begin{bmatrix}
x_1 \\ y_1 \\ x_2 \\ y_2 \\ \vdots \\ x_N \\ y_N
\end{bmatrix}}_C.
</math>

Since there are 8 unknown parameters (<math>h_{ij}</math>) and each point gives two equations, we need at least four points on the floor, i.e. <math>N\geq 4</math>, with no three of them collinear. Once we obtain M and C from the point coordinates in the image and on the floor, computing H is straightforward (e.g. in MATLAB, use <math>M \backslash C</math>, which returns the least-squares solution when <math>N > 4</math>). Putting the entries of <math>H</math> back into matrix form gives the homography from <math>(u,v)</math> to <math>(x,y)</math>. Then, given any image coordinate <math>(\bar u, \bar v)</math>, we can compute its corresponding floor coordinate <math>(\bar x,\bar y)</math> from the homography relationship above. Do not forget to divide by the scaling factor <math>\lambda</math>:

<math>\bar x = \frac{h_{11}\bar u + h_{12}\bar v + h_{13}}{h_{31}\bar u + h_{32}\bar v + 1}, \qquad \bar y = \frac{h_{21}\bar u + h_{22}\bar v + h_{23}}{h_{31}\bar u + h_{32}\bar v + 1}.</math>
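The estimation step can be sketched as follows. The text suggests MATLAB's <tt>M\C</tt>; this sketch instead solves the normal equations <math>M^T M H = M^T C</math> by Gauss-Jordan elimination with partial pivoting. That gives the same least-squares answer for well-conditioned data, but it is less numerically robust than the QR-based solve MATLAB uses for rectangular systems. With raw pixel coordinates in the hundreds, normalizing the points first is advisable. `Correspondence` and `estimateHomography` are hypothetical names.

```cpp
#include <array>
#include <cassert>
#include <cmath>
#include <utility>
#include <vector>

struct Correspondence {
    double u, v;  // image (pixel) coordinates
    double x, y;  // floor coordinates
};

// Estimate h11..h32 (h33 = 1) from N >= 4 correspondences by accumulating
// the two rows of M and C per point into the normal equations
// (M^T M) h = M^T C and solving them. Returns the 3x3 homography, row-major.
std::array<std::array<double, 3>, 3> estimateHomography(const std::vector<Correspondence>& pts) {
    assert(pts.size() >= 4);
    double A[8][9] = {};  // augmented matrix [M^T M | M^T C]
    for (const Correspondence& p : pts) {
        const double rows[2][8] = {
            {p.u, p.v, 1, 0, 0, 0, -p.u * p.x, -p.v * p.x},
            {0, 0, 0, p.u, p.v, 1, -p.u * p.y, -p.v * p.y}};
        const double rhs[2] = {p.x, p.y};
        for (int r = 0; r < 2; ++r)
            for (int i = 0; i < 8; ++i) {
                for (int j = 0; j < 8; ++j) A[i][j] += rows[r][i] * rows[r][j];
                A[i][8] += rows[r][i] * rhs[r];
            }
    }
    // Gauss-Jordan elimination with partial pivoting.
    for (int col = 0; col < 8; ++col) {
        int pivot = col;
        for (int r = col + 1; r < 8; ++r)
            if (std::fabs(A[r][col]) > std::fabs(A[pivot][col])) pivot = r;
        assert(std::fabs(A[pivot][col]) > 1e-12);  // degenerate point layout
        for (int j = 0; j < 9; ++j) std::swap(A[col][j], A[pivot][j]);
        for (int r = 0; r < 8; ++r) {
            if (r == col) continue;
            const double f = A[r][col] / A[col][col];
            for (int j = col; j < 9; ++j) A[r][j] -= f * A[col][j];
        }
    }
    double h[8];
    for (int i = 0; i < 8; ++i) h[i] = A[i][8] / A[i][i];
    return {{{h[0], h[1], h[2]}, {h[3], h[4], h[5]}, {h[6], h[7], 1.0}}};
}
```

With exact synthetic data, for example nine points generated from a known homography, the recovered entries match the true ones up to rounding error.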

Note that the above 8-parameter homography calibration works well when the camera's lens distortion is not noticeable. If the distortion is significant, more advanced calibration techniques, such as the [http://www.vision.caltech.edu/bouguetj/calib_doc/ MATLAB calibration toolbox], must be used.

For our webcams, we tried the MATLAB calibration toolbox and found that the distortion coefficients are fairly small. Therefore, we chose the homography method to calibrate our cameras. This makes the calibration matrix and the computation much simpler than the full MATLAB calibration, yet still yields sufficiently accurate results.

|||

=== Calibration Procedures === |

|||

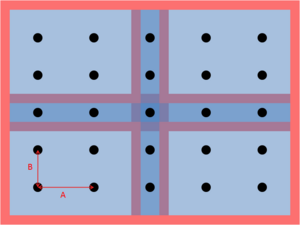

The calibration routine for the vision localization system uses 9 equally-spaced points per camera to find a mapping from the image coordinates to the real-world coordinates. The field of view of the four cameras must overlap the center horizontal and vertical dots that form a '+' shape. The center dot should be seen by all four cameras. The size of the dots is not significant as the system utilizes the center of mass of each dot to determine the "center" of the dot. |

|||

[[Image:machine_vision_calibration.png|300px|thumb|left|Twenty-five points are used to calibrate the cameras. The field of view must overlap the dots that form a '+'.]] |

|||

<br clear = 'all'> |
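The statement that each dot is located by its center of mass can be illustrated with a minimal sketch. It thresholds a grayscale image and averages the coordinates of the pixels that pass. It assumes the image region contains a single dot and uses an unweighted (binary) center of mass; the project may label blobs and weight pixels differently. `thresholdCentroid` and `brightDots` are hypothetical names. Set `brightDots = false` for black dots on a white floor, and `true` for LED dots in the RGB system.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

struct Centroid {
    double u, v;  // center of mass in pixel coordinates
    int count;    // number of pixels classified as part of the dot
};

// Center of mass of all pixels on the "dot" side of `threshold` in a
// row-major grayscale image. Returns count == 0 if no pixel qualifies.
Centroid thresholdCentroid(const std::vector<std::uint8_t>& img, int width, int height,
                           std::uint8_t threshold, bool brightDots) {
    assert(width > 0 && height > 0);
    assert(img.size() == static_cast<std::size_t>(width) * static_cast<std::size_t>(height));
    double sumU = 0.0, sumV = 0.0;
    int count = 0;
    for (int v = 0; v < height; ++v)
        for (int u = 0; u < width; ++u) {
            const std::uint8_t p = img[static_cast<std::size_t>(v) * width + u];
            const bool inDot = brightDots ? (p > threshold) : (p < threshold);
            if (inDot) {
                sumU += u;
                sumV += v;
                ++count;
            }
        }
    if (count == 0) return {0.0, 0.0, 0};
    return {sumU / count, sumV / count, count};
}
```

Because the result is an average over all dot pixels, it is insensitive to the dot's size. This is why the dot size does not matter as long as the threshold isolates one dot at a time.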

To calibrate:
# Place the 25 equally spaced dots on the testbed, following the image above. The dots should be distributed over the entire testbed (don't bunch them up in a small area).
#* RGB Vision System: use LED "dots" or other point sources of light rather than black spots, since the RGB vision system detects point light sources and will not pick up black spots.
# Start the program, and type 'y' at the prompt that asks whether you wish to calibrate.
# When prompted, enter the horizontal spacing in millimeters (parameter A in the diagram) and the vertical spacing in millimeters (parameter B in the diagram). These numbers are recorded in the file <tt>calibration_dot_spacing.txt</tt>.
# The GUI window will then display a thresholded image, and the console will display the number of dots seen by each camera. Adjust the threshold with the '+' and '-' keys until each camera sees only the nine dots used to calibrate it.
# Select the GUI window and hit Enter.
# Remove the dots.
# Select the GUI window and hit Enter.

The program should now be running. Your calibration information will be recorded in <tt>Quadrant0.txt, Quadrant1.txt, Quadrant2.txt, Quadrant3.txt,</tt> and <tt>calibration_dot_spacing.txt</tt>. If you choose not to recalibrate your cameras the next time, the data in these files will be used to generate the calibration matrices.

* When calibrating, the dots should be raised to the same height as the patterns on the robots. It is also possible to calibrate one camera at a time using only 9 dots. Place the dots under one camera and run the calibration routine. Then save a copy of the <tt>Quadrant_.txt</tt> file it generates for that camera (the other three files will be garbage) so that it will not be overwritten. Repeat this for each of the four cameras, then copy the four good files back into the project directory.
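To pair image centroids with floor coordinates, the routine needs the world position of every dot. This minimal sketch assumes the 25 dots form a 5 × 5 grid centered on the world origin, which the latest revision places in the middle of the testbed. It also assumes spacing A along x and B along y, and that each camera sees the 3 × 3 block of its own quadrant, including its half of the '+'. The function names and the sign-based quadrant selection are hypothetical. They do not reproduce the numbering used in <tt>Quadrant0.txt</tt> to <tt>Quadrant3.txt</tt>.

```cpp
#include <cassert>
#include <vector>

struct WorldPoint {
    double x, y;  // millimeters, origin at the center dot
};

// World coordinates of the full 5 x 5 grid, row by row (y from -2B to +2B).
std::vector<WorldPoint> calibrationGrid(double A, double B) {
    std::vector<WorldPoint> pts;
    for (int row = -2; row <= 2; ++row)
        for (int col = -2; col <= 2; ++col)
            pts.push_back({col * A, row * B});
    return pts;
}

// The 9 dots (3 x 3) seen by one quadrant camera, selected by the signs of
// x and y (xSign, ySign = +1 or -1). The center row and column (the '+') are
// shared with neighboring quadrants, and the center dot with all four.
std::vector<WorldPoint> quadrantDots(double A, double B, int xSign, int ySign) {
    assert((xSign == 1 || xSign == -1) && (ySign == 1 || ySign == -1));
    std::vector<WorldPoint> pts;
    for (int j = 0; j <= 2; ++j)
        for (int i = 0; i <= 2; ++i)
            pts.push_back({xSign * i * A, ySign * j * B});
    return pts;
}
```

Each camera's 9 measured centroids, matched to these 9 world points, are enough for the 8-parameter homography fit, since at least four points are required.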

|||

=== Calibration Results === |

|||

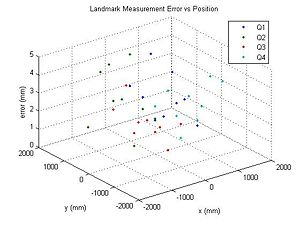

Using the 8-parameter homography calibration, the maximum calibration error is around 5mm, which is around 2 pixels error. |

|||

[[Image:HB_calibration_error.jpg|300px|thumb|left|The absolute calibration error of the twenty-five landmarkers on the ground. Different quadrants are denoted by different colors.]] |

|||

<br clear = 'all'> |

Below are the MATLAB calibration results for the intrinsic parameters of one of the cameras (note the fairly small distortion coefficients, <tt>kc</tt>):
<pre>
% Intrinsic Camera Parameters
%
% This script file can be directly executed under Matlab to recover the camera intrinsic and extrinsic parameters.
% IMPORTANT: This file contains neither the structure of the calibration objects nor the image coordinates of the calibration points.
% All those complementary variables are saved in the complete matlab data file Calib_Results.mat.
% For more information regarding the calibration model visit http://www.vision.caltech.edu/bouguetj/calib_doc/

%-- Focal length:
fc = [ 835.070614323296470 ; 776.358109604829560 ];

%-- Principal point:
cc = [ 448.722941671177130 ; 333.157042750110920 ];

%-- Skew coefficient:
alpha_c = 0.000000000000000;

%-- Distortion coefficients:
kc = [ 0.000000000000000 ; -0.030646660052499 ; 0.000000000000000 ; 0.000000000000000 ; 0.000000000000000 ];
</pre>

==Pausing==

==Using the Command Console==
To enter command mode, press 'c'. While in command mode, the main loop is not running; you must exit command mode to resume it. Type 'exit' to exit command mode and resume the main loop.

'''Note: Because of packet loss, there is no guarantee that all of the robots will receive a command. It is advisable to send a command several times to make sure all the robots receive it. You can use the arrow keys to recall previously sent messages.'''

Enter commands using the following syntax:

<tt><command name> <target ID> <parameter 1> <parameter 2> ... <parameter N></tt>

'''To send the command to all robots, use ID 0. To send the command to a specific robot, use that robot's ID.'''

You can also enter multiple commands at a time:

<tt>sleep 1 sleep 2 wake 4 goto 0 100 -100 deadb 0 150</tt>

===Commands===
{| class="wikitable" border="3"
! Command !! Description !! Parameters !! Example
|-
| sleep || The robot will stop moving and stop sending data. || <tt>sleep <ID></tt> || <tt> sleep 0 </tt>
|-
| wake || The robot will wake from sleep and resume moving and sending data. || <tt>wake <ID></tt> || <tt> wake 0 </tt>
|-
| goto || Similar to <tt>goal</tt>, but only changes <tt><Ix></tt> and <tt><Iy></tt>. || <tt>goto <ID> <Ix> <Iy> </tt> || <tt> goto 0 100 300</tt>
|-
| deadb || Change the robot motor deadband. The motors will only move for<br>speeds (wheel ticks per second) faster than this, which removes some jittering at low speeds. || <tt>deadb <ID> <velocity> </tt> || <tt> deadb 0 150</tt>
|-
| egain || Change the estimator gains. || <tt>egain <ID> <KP> <KI> <Gamma> </tt> || <tt> egain 0 0.7 0.1 0.05</tt>
|-
| cgain || Change the motion controller gains. || <tt>cgain <ID> <KIx> <KIy> <KIxx> <KIxy> <KIyy> </tt> || <tt> cgain 0 2 2 0.001 0.001 0.001</tt>
|-
| commr || Change the communication radius.<br>The agent will discard any packets from other agents farther away than this distance. || <tt>commr <ID> <distance> </tt> || <tt> commr 0 1500</tt>
|-
| speed || Change the maximum allowed speed for the robot. || <tt>speed <ID> <speed> </tt> || <tt> speed 0 300</tt>
|-
| safed || Change the obstacle avoidance threshold. || <tt>safed <ID> <distance> </tt> || <tt> safed 0 200</tt>
|}

<pre>
sleep   1.0   sleep
wake    2.0   wake (resets x_i consensus values)
goal    3.0   change goal parameters
goto    4.0   change goal position only (formation is the same)
deadb   5.0   change motor low-velocity deadband
egain   6.0   change estimator gains KP,KI,GAMMA
cgain   7.0   change controller gains
commr   8.0   change COMMR
speed   9.0   change MAX_WHEEL_V_TICKS
safed  10.0   change obstacle avoidance threshold SAFEDIST
</pre>
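Each command name fixes how many parameters follow the target ID. That is enough to split a multi-command line such as <tt>sleep 1 sleep 2 wake 4 goto 0 100 -100 deadb 0 150</tt> into individual commands. The parser below is a hypothetical sketch, not the program's own console code. Parameter counts come from the Commands table and numeric codes from the listing above. <tt>goal</tt> is left out because its parameter list is not shown on this page.

```cpp
#include <cassert>
#include <map>
#include <sstream>
#include <string>
#include <vector>

struct Command {
    std::string name;
    double code;                 // numeric code from the listing above
    int targetId;                // 0 = all robots
    std::vector<double> params;  // parameters after the target ID
};

struct CommandSpec {
    double code;
    int nParams;  // number of parameters after <target ID>
};

// Parameter counts from the Commands table ("goal" omitted).
static const std::map<std::string, CommandSpec>& commandSpecs() {
    static const std::map<std::string, CommandSpec> specs = {
        {"sleep", {1.0, 0}}, {"wake", {2.0, 0}},  {"goto", {4.0, 2}},
        {"deadb", {5.0, 1}}, {"egain", {6.0, 3}}, {"cgain", {7.0, 5}},
        {"commr", {8.0, 1}}, {"speed", {9.0, 1}}, {"safed", {10.0, 1}}};
    return specs;
}

// Split a console line into commands and append them to `out`. Returns false
// on an unknown command name or a missing/non-numeric value; commands parsed
// before the error remain in `out`.
bool parseCommandLine(const std::string& line, std::vector<Command>& out) {
    std::istringstream in(line);
    std::string name;
    while (in >> name) {
        auto it = commandSpecs().find(name);
        if (it == commandSpecs().end()) return false;
        Command cmd{name, it->second.code, 0, {}};
        if (!(in >> cmd.targetId)) return false;
        for (int i = 0; i < it->second.nParams; ++i) {
            double p;
            if (!(in >> p)) return false;
            cmd.params.push_back(p);
        }
        out.push_back(cmd);
    }
    return true;
}
```

The example line above splits into five commands. <tt>goto 0 100 -100</tt> becomes a command for all robots with parameters 100 and -100.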

==Exiting the Program==
Press ESC to quit the program.

=Additional Considerations=
==Calibration==
The Machine Vision Localization System is a robust system for tracking the locations of e-pucks with rotationally invariant patterns. However, several problems remain as the system transitions from the paper "dice" patterns to LED light boards.

[[Image:machine_vision_calibration.png|300px|thumb|left|Twenty-five points are used to calibrate the cameras.]]

*The software (Logitech QuickCam) and drivers used for the web cameras include an auto-exposure and light-adjusting algorithm. While this works well under normal lighting conditions, the cameras over-expose in a darkened arena. Consequently, the individual LEDs in the pattern boards are often hard to distinguish from one another, rendering the machine vision system nonfunctional.
**The current Logitech software (build 11.5) can only control the auto-exposure of 2 cameras at a time. The newest software (build 12.0, or LWS 1.0) can control the exposure of 4 cameras at a time, but it has proven incompatible with the machine vision code, rendering the program inoperable. Research is being done to find workarounds, as control of exposure and light adjustment is key to developing a more robust and stable vision system. Another option is to update all the libraries and SDKs in the hope that they can accommodate the newer software; updating the VideoLibrary and OpenCV libraries may also help.
**Other solutions involve physically changing the environment to compensate for the poor low-light sensitivity of the web cameras. For instance, upward-casting lights could "brighten" the environment. Alternatively, the brightness of the projected patterns could be monitored and kept at a level that maintains proper camera exposure.

=Updates and Notes on Changes Made=
Since the first implementation of the Machine Vision Localization System, several updates have been made to transition the system from the paper "dice" patterns to the LED light boards.

*As discussed in the [[Indoor_Localization_System#Position_and_Angle|Indoor Localization System Position and Angle entry]], the Machine Vision Localization System generates positional data for each e-puck by determining the center of mass of its identifier, such as a paper "dice" pattern or an LED light board. However, for most configurations of the rotationally invariant patterns, the center of mass of the pattern is '''not''' the center of mass of the e-puck. With the paper "dice" patterns, this problem was easily circumvented by shifting each pattern so that its center of mass lay over the center of mass of the e-puck. With the LED light boards this is not easily done, so the algorithm was changed instead. The preprocessed target classifier document is augmented with additional values that offset the center of mass of the LED light board back to the center of mass of the e-puck.
**This change was implemented in the RGB Machine Vision Localization System code, which can be downloaded at the beginning of this entry.
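A minimal sketch of how such an offset can be applied, under stated assumptions. The source does not specify how the offset is stored, so this sketch assumes each pattern carries an offset (dx, dy) in the pattern's own frame, meaning it rotates with the robot. It also assumes the identified heading θ is measured counterclockwise from the world +x axis. `Pose` and `correctToRobotCenter` are hypothetical names. The offset vector is rotated by θ and added to the pattern's center of mass.

```cpp
#include <cassert>
#include <cmath>

struct Pose {
    double x, y;   // mm, world frame
    double theta;  // heading in radians, counterclockwise from +x
};

// Shift the center of mass of an LED pattern to the robot's center of mass.
// (offsetX, offsetY) points from the pattern centroid to the robot center,
// expressed in the pattern's own frame, so it is rotated by theta first.
Pose correctToRobotCenter(const Pose& pattern, double offsetX, double offsetY) {
    assert(std::isfinite(pattern.theta));
    const double c = std::cos(pattern.theta);
    const double s = std::sin(pattern.theta);
    return {pattern.x + c * offsetX - s * offsetY,
            pattern.y + s * offsetX + c * offsetY,
            pattern.theta};
}
```

Rotating the offset, rather than adding it directly, keeps the correction valid at every heading. A fixed world-frame shift would only be right for one orientation of the robot.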

[[Category:SwarmRobotProject]]

Latest revision as of 15:46, 4 May 2010

Project Files

- Vision_system_localization_project.zip (Swarm Consensus Estimation project files with source code)

- RGB_Machine_Vision_Localization_System.zip

- The RGB vision system follows the same setup process as the original Swarm Consensus vision system, so please follow the steps below.

- Any requirements or changes to the original steps that are specific to the RGB vision system are noted in the steps below.

Overview

This is a machine vision system that tracks multiple targets and can send out the positions via the serial port. It was developed specifically for the Swarm Robotics project, but can be adapted for other uses. It is based upon the Indoor Localization System, but has several enhancements and bug fixes. Refer to Indoor Localization System for a basic overview of the setup of the system and the workings of the pattern identification algorithm.

Major Enhancements/Changes

- The system will now mark the targets with an overlay and display coordinate data onscreen.

- The serial output is now formatted for the XBee radio using the XBee's API mode with escape characters.

- The calibration routine has been improved, and only needs to be performed once.

- A command interface for sending out commands via the serial port has been added.

- The system will discard targets too close to the edge of the camera frame to prevent misidentification due to clipping.

- The origin of the world coordinate is now in the middle, not the lower left corner.

- The GUI displaying the camera frames is now full sized instead of a thumbnail. However, if your monitor isn't big enough, you can resize them.

- The output coordinates are now corrected to the center of mass of the robot rather than that of the LED light pattern.

Major Bug Fixes

- Two major memory leaks have been fixed.

- Calibration matrices are now calculated correctly.

- A file handling bug that caused an off-by-one error in LoadTargetData() has been fixed.

Target Patterns

- TrackSysV1_6_20_08.zip (Target patterns and preprocessor)

- For actual images of the target patterns: TrackSysTraining(folder) >> images(folder) >> FinalSet(folder)>>1,2,3,...,24

OpenCV Documentation

Operation

Setting up the Cameras

The cameras are set up and operate as described in the Indoor Localization System entry, with one change: targets at the border of the camera frame are now discarded. This prevents a pattern from being misidentified when one or more of its dots fall outside the frame. It also means the camera views must overlap enough that a target in the dead zone of one camera is picked up by another camera.

The height of the cameras will determine how small your patterns can be before becoming indistinguishable. Changing the height also changes the focus, so be sure to manually adjust the focus of the cameras when the height is changed.

How to Use The System

Camera Setup

The four cameras used were standard Logitech QuickCam Communicate Deluxes. For future use, the videoInput library used is very compatible and works with most capture devices. As measured, the viewing angle (from center) of the Logitech cameras was around 30 degrees (horizontal plane) and 25 degrees (vertical plane).

Before attaching the cameras, several considerations must be made.

1. Choose a desired ALL WHITE area to cover. ***IMPORTANT: If you want to be able to cover an continuous region, the images as seen by the cameras must overlap to ensure a target is at least fully visible in one frame of a camera*** Keep in mind that there is a trade-off between area, and resolution. In addition, the size of the patterns will have to be increased above the threshold of noise.

- RGB Vision System: the floor color does not matter for the RGB vision system. However, a non-reflective (matte) floor surface may be necessary to reduce stray reflections from the floor reaching the cameras.

2. Ensure that the cameras all face the same direction: viewed from above, the "top" of each camera should point the same way (N/S/E/W). If these orientations ever need to vary, image reflection and rotation could be handled in software, though this has not been implemented.

3. Mount the cameras so that their lenses are as close to normal (perpendicular) to the floor as possible. Although the camera calibration should account for the camera pose, keeping the lenses normal to the floor reduces the amount of noise at the edges of the images.
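Software reorientation was never implemented. If it were needed, one possible approach (an assumption, not a description of the project code) is to remap each detected pixel coordinate before calibration. `Pixel` and `reorient` are hypothetical names; coordinates follow the usual image convention with u to the right and v down, and the rotation is clockwise.

```cpp
#include <cassert>

struct Pixel {
    int u;  // column, 0 at the left edge
    int v;  // row, 0 at the top edge
};

// Returns where pixel p lands after the width x height image is optionally
// mirrored left-right and then rotated clockwise by quarterTurns * 90 degrees.
Pixel reorient(Pixel p, int width, int height, int quarterTurns, bool mirror) {
    assert(p.u >= 0 && p.u < width && p.v >= 0 && p.v < height);
    if (mirror) p.u = width - 1 - p.u;
    int turns = ((quarterTurns % 4) + 4) % 4;  // normalize, e.g. -1 -> 3
    for (int i = 0; i < turns; ++i) {
        // One clockwise quarter turn: the image becomes height x width.
        Pixel q{height - 1 - p.v, p.u};
        int oldWidth = width;
        width = height;
        height = oldWidth;
        p = q;
    }
    return p;
}
```

For example, in a 640 x 480 image a quarter turn moves the top-left pixel (0, 0) to the top-right corner of the rotated 480 x 640 image, (479, 0).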

Computer Setup

In the current implementation, the system runs on a Windows-based computer (a restriction of the videoInput library) and should work on Windows XP or Vista. To set up the computer to develop and run the software, install the following:

1. Download and install the Logitech QuickCam Communicate Deluxe webcam drivers.

The drivers are available at \\mcc.northwestern.edu\dfs\me-labs\lims\Swarms\LogitechDrivers.zip or http://www.logitech.com/index.cfm/435/3057&cl=us,en. If you download them from the Logitech website, you need BOTH versions of the software (qc1150 and lws110), listed under downloads as "Logitech webcam software with Vid" and "Quickcam v. 11.5". Then:

- Install qc1150 first, and restart when prompted.
- Install lws110 (you do not need the Vid software), and restart when prompted.
- Open Device Manager -> Imaging devices, which should list 4 cameras.
- For each camera, right-click and choose Update Driver Software, then Browse my computer, then Let me pick from a list. Select QuickCam Communicate Deluxe Version: 11.5.0.1145 and click Next to install the drivers. Do this for all 4 cameras before restarting.

You now have the new software (for controlling the cameras individually) and the old drivers (for working with the vision system).

2. Install Microsoft Visual Studio Professional (Express Edition is incompatible with some of the code)

3. Download and install Microsoft Windows Platform SDK - http://www.microsoft.com/downloads/details.aspx?FamilyId=0BAF2B35-C656-4969-ACE8-E4C0C0716ADB&displaylang=en

4. Download and install Microsoft DirectX 9+ SDK - http://www.microsoft.com/downloads/details.aspx?FamilyId=572BE8A6-263A-4424-A7FE-69CFF1A5B180&displaylang=en

5. Download and install Intel OpenCV Library - http://sourceforge.net/projects/opencvlibrary/

6. Download and install the videoInput Library - http://muonics.net/school/spring05/videoInput/

7. Download the source code for the vision system (Vision System Localization Project) from the Project Files section at the top of this page.

The project will probably not compile right after you open it, because Visual C++ does not yet know where the library headers and library files are installed. To add the correct directories, go to

Tools>Options>Project and Solutions>VC++ directories

and select Include files from the drop-down menu. Add the following (the directory paths on your computer may differ depending on your system and the versions you installed):

- C:\Program Files\OpenCV\otherlibs\highgui

- C:\Program Files\OpenCV\otherlibs\cvcam\include

- C:\Program Files\OpenCV\cvaux\include

- C:\Program Files\OpenCV\cxcore\include

- C:\Program Files\OpenCV\cv\include

- C:\Program Files\Microsoft SDKs\Windows\v6.1\Include

Then select Library files from the drop-down menu and add:

- C:\Program Files\Microsoft SDKs\Windows\v6.1\Lib

- C:\Users\LIMS\Documents\Visual Studio 2005\Libraries\videoInput0.1991\videoInputSrcAndDemos\libs\DShow\lib

- C:\Users\LIMS\Documents\Visual Studio 2005\Libraries\videoInput0.1991\compiledLib\compiledByVS2005

- C:\Users\LIMS\Documents\Visual Studio 2005\Libraries\videoInput0.1991\videoInputSrcAndDemos\libs\videoInput

Starting the Program

- Compile and run the program.

- When you first run the program, it opens a console window and asks for a COM port number. This is the serial port number of the XBee radio that will be used to send out data. Type the number and press Enter (e.g. Enter COM number: 1<enter>).

- Be sure to configure the XBee radio appropriately. You can find instructions here

- The program should now connect to the cameras and open two new windows: one with numbered quadrants (the GUI window) and one showing the view of one of the cameras. Use your mouse to click on the quadrant that corresponds to that camera view. Repeat until all four cameras have been matched to quadrants.

- Make sure the GUI window is selected, and press Enter.

- Go back to the console window, where you should now see a prompt asking whether you want to recalibrate your cameras. Type y for yes or n for no, then press Enter. To recalibrate your cameras, see the Camera Calibration section.

- The GUI window should now show a thresholded image, and the console will display how many dots each camera sees. Adjust the thresholding parameters ('+' and '-' change the black/white threshold; 'z' and 'x' change the area threshold) until you are satisfied with the thresholded image.

- Make sure the GUI window is selected, then hit Enter.

- Remove any calibration patterns, and hit Enter. The program should now be running.

- When you turn on the robots, they will be in sleep mode. Use the wake command to start them.
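The quadrant-matching step amounts to turning a mouse click in the GUI window into a quadrant index. A minimal sketch, assuming the GUI window is split into four equal quadrants numbered 0 to 3 row by row from the top left; the real numbering is whatever the program draws, and `quadrantFromClick` is a hypothetical helper.

```cpp
#include <cassert>

// Maps a click at (x, y) in a windowWidth x windowHeight GUI window to a
// quadrant index. Assumed layout: 0 = top-left, 1 = top-right,
// 2 = bottom-left, 3 = bottom-right.
int quadrantFromClick(int x, int y, int windowWidth, int windowHeight) {
    assert(windowWidth > 0 && windowHeight > 0);
    assert(x >= 0 && x < windowWidth && y >= 0 && y < windowHeight);
    int col = (x < windowWidth / 2) ? 0 : 1;
    int row = (y < windowHeight / 2) ? 0 : 1;
    return row * 2 + col;
}
```

The program would store the returned index for the camera currently on screen, which is how each camera ends up associated with one of the Quadrant0.txt to Quadrant3.txt calibration files.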

Camera Calibration

Theory

The purpose of camera calibration is to map pixel coordinates in the camera's image to coordinates on the floor. We assume that the camera can be modeled as a pinhole camera: all rays of light from the floor pass through a single point and are projected onto the camera's image. Essentially, we are mapping points from one plane (the image) to another (the floor) through a point (the pinhole). Such a plane-to-plane projective mapping is called a homography, and it describes the camera accurately when lens distortion is small.

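Let $(X, Y)$ be the coordinates of a point on the floor plane, $(x, y)$ be the corresponding point on the camera image plane, and $\lambda$ be a scalar. Using homogeneous coordinates, the transformation from the image plane to the floor plane is

$$\lambda \begin{bmatrix} X \\ Y \\ 1 \end{bmatrix} = H \begin{bmatrix} x \\ y \\ 1 \end{bmatrix}, \qquad H = \begin{bmatrix} h_{11} & h_{12} & h_{13} \\ h_{21} & h_{22} & h_{23} \\ h_{31} & h_{32} & 1 \end{bmatrix}.$$

Suppose we have a group of points $(X_i, Y_i)$, $i = 1, \dots, N$, on the floor, and their corresponding image coordinates are $(x_i, y_i)$. Using $(X_i, Y_i)$ and $(x_i, y_i)$, we need to find $H$. Following the procedure in Section 5.2 of Image Formation and Camera Calibration, substituting $\lambda = h_{31} x_i + h_{32} y_i + 1$ gives two linear equations per point, which stack into

$$M h = C, \quad M = \begin{bmatrix} x_1 & y_1 & 1 & 0 & 0 & 0 & -x_1 X_1 & -y_1 X_1 \\ 0 & 0 & 0 & x_1 & y_1 & 1 & -x_1 Y_1 & -y_1 Y_1 \\ \vdots & & & & & & & \vdots \\ x_N & y_N & 1 & 0 & 0 & 0 & -x_N X_N & -y_N X_N \\ 0 & 0 & 0 & x_N & y_N & 1 & -x_N Y_N & -y_N Y_N \end{bmatrix}, \quad h = \begin{bmatrix} h_{11} \\ h_{12} \\ h_{13} \\ h_{21} \\ h_{22} \\ h_{23} \\ h_{31} \\ h_{32} \end{bmatrix}, \quad C = \begin{bmatrix} X_1 \\ Y_1 \\ \vdots \\ X_N \\ Y_N \end{bmatrix}.$$

Since there are 8 parameters ($h_{11}, \dots, h_{32}$) to obtain, we need at least four points on the floor, i.e. $N \ge 4$. Once $M$ and $C$ are built from the point coordinates in the image and on the floor, it is easy to compute $h$ (e.g. in MATLAB, use h = M\C, which returns the least-squares solution when $N > 4$). Putting the obtained $h$ into matrix form gives the homography $H$ from $(x, y)$ to $(X, Y)$. Then, given any image coordinate $(x, y)$, we can compute its corresponding floor coordinate from the homography relationship above. Do not forget to divide by the scaling factor $\lambda$:

$$X = \frac{h_{11} x + h_{12} y + h_{13}}{h_{31} x + h_{32} y + 1}, \qquad Y = \frac{h_{21} x + h_{22} y + h_{23}}{h_{31} x + h_{32} y + 1}.$$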
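The fitting procedure can be sketched in C++ without external libraries. This is a minimal illustration, not the project's implementation: it stacks the two rows per correspondence into [M | C], solves for the 8 parameters in the least-squares sense with modified Gram-Schmidt QR (a swapped-in technique standing in for MATLAB's M\C), and applies H including the division by the scale factor. `Point2`, `Homography`, `fitHomography`, and `imageToFloor` are hypothetical names.

```cpp
#include <array>
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

struct Point2 {
    double x, y;
};

// Row-major 3x3 homography; H[8] (h33) is fixed to 1 as in the 8-parameter model.
using Homography = std::array<double, 9>;

// Least-squares fit of M h = C from N >= 4 image/floor correspondences.
Homography fitHomography(const std::vector<Point2>& img, const std::vector<Point2>& flr) {
    assert(img.size() == flr.size() && img.size() >= 4);
    const std::size_t rows = 2 * img.size();
    std::vector<std::array<double, 9>> A(rows);  // columns 0..7 hold M, column 8 holds C
    for (std::size_t i = 0; i < img.size(); ++i) {
        double x = img[i].x, y = img[i].y, X = flr[i].x, Y = flr[i].y;
        A[2 * i] = {x, y, 1, 0, 0, 0, -x * X, -y * X, X};
        A[2 * i + 1] = {0, 0, 0, x, y, 1, -x * Y, -y * Y, Y};
    }
    double R[8][9] = {};  // upper-triangular R; column 8 accumulates Q^T C
    for (int j = 0; j < 8; ++j) {
        double norm = 0.0;
        for (std::size_t i = 0; i < rows; ++i) norm += A[i][j] * A[i][j];
        norm = std::sqrt(norm);
        assert(norm > 1e-12 && "degenerate point configuration");
        R[j][j] = norm;
        for (std::size_t i = 0; i < rows; ++i) A[i][j] /= norm;  // column j becomes q_j
        for (int k = j + 1; k < 9; ++k) {  // remove the q_j component from later columns
            double dot = 0.0;
            for (std::size_t i = 0; i < rows; ++i) dot += A[i][j] * A[i][k];
            R[j][k] = dot;
            for (std::size_t i = 0; i < rows; ++i) A[i][k] -= dot * A[i][j];
        }
    }
    Homography H{};
    for (int j = 7; j >= 0; --j) {  // back-substitute R h = Q^T C
        double s = R[j][8];
        for (int k = j + 1; k < 8; ++k) s -= R[j][k] * H[k];
        H[j] = s / R[j][j];
    }
    H[8] = 1.0;
    return H;
}

// Maps an image point to floor coordinates, dividing out the scale factor lambda.
Point2 imageToFloor(const Homography& H, Point2 p) {
    double lambda = H[6] * p.x + H[7] * p.y + H[8];
    return {(H[0] * p.x + H[1] * p.y + H[2]) / lambda,
            (H[3] * p.x + H[4] * p.y + H[5]) / lambda};
}
```

Solving through QR rather than forming M^T M avoids squaring the condition number, which matters here because the columns of M mix entries near 1 with products of pixel and millimeter coordinates.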

Note that the 8-parameter homography calibration above works well when camera distortion is not noticeable. If the distortion is significant, more advanced calibration techniques, such as the MATLAB calibration toolbox, must be used.

For our webcams, we tried the MATLAB calibration toolbox and found that the distortion coefficients are fairly small. We therefore chose the homography method, which makes the calibration matrix and the computation much simpler than the full MATLAB calibration while still yielding sufficiently accurate results.

Calibration Procedures

The calibration routine for the vision localization system uses 9 equally spaced points per camera to find a mapping from image coordinates to real-world coordinates. The fields of view of the four cameras must overlap along the center row and center column of dots, which form a '+' shape, and the center dot must be seen by all four cameras. The size of the dots is not significant, because the system uses the center of mass of each dot as its "center".
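Locating each dot's center of mass can be sketched as thresholding followed by connected-component labeling. The sketch assumes an 8-bit grayscale image stored row by row and dark dots on a light floor; `threshold`, `minArea`, and `maxArea` stand in for the black/white and area thresholds adjusted with the '+'/'-' and 'z'/'x' keys. For the LED-based RGB system the comparison would be inverted to keep bright pixels. The project itself uses OpenCV, and `Dot` and `findDots` are hypothetical names.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

struct Dot {
    double cx, cy;  // center of mass in pixel coordinates
    int area;       // number of pixels in the blob
};

// Pixels darker than `threshold` are foreground. Each 4-connected blob whose
// area lies in [minArea, maxArea] is reported by its center of mass.
std::vector<Dot> findDots(const std::vector<unsigned char>& gray, int width, int height,
                          int threshold, int minArea, int maxArea) {
    assert(gray.size() == static_cast<std::size_t>(width) * height);
    std::vector<char> seen(gray.size(), 0);
    std::vector<Dot> dots;
    std::vector<int> stack;  // explicit stack for the flood fill
    for (int start = 0; start < width * height; ++start) {
        if (seen[start] || gray[start] >= threshold) continue;
        long long sumX = 0, sumY = 0;
        int area = 0;
        stack.push_back(start);
        seen[start] = 1;
        while (!stack.empty()) {
            int idx = stack.back();
            stack.pop_back();
            int x = idx % width, y = idx / width;
            sumX += x;
            sumY += y;
            ++area;
            const int nx[4] = {x - 1, x + 1, x, x};
            const int ny[4] = {y, y, y - 1, y + 1};
            for (int k = 0; k < 4; ++k) {
                if (nx[k] < 0 || nx[k] >= width || ny[k] < 0 || ny[k] >= height) continue;
                int n = ny[k] * width + nx[k];
                if (!seen[n] && gray[n] < threshold) {
                    seen[n] = 1;
                    stack.push_back(n);
                }
            }
        }
        if (area >= minArea && area <= maxArea)  // area filter rejects specks and large regions
            dots.push_back({static_cast<double>(sumX) / area, static_cast<double>(sumY) / area, area});
    }
    return dots;
}
```

Because the center of mass averages over every pixel in the blob, the result does not depend on the dot's size, matching the remark above.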

To calibrate:

- Place the 25 equally spaced dots (a 5 x 5 grid) on the testbed. The dots should be well distributed over the entire testbed (do not bunch them up in a small area), following the image above.

- RGB Vision System: use LED 'dots' (point light sources) instead of black spots, because the RGB vision system detects point sources of light and will not pick up black spots.

- Start the program, and type in 'y' at the prompt that asks whether or not you wish to calibrate.

- Enter the horizontal spacing, measured in millimeters (parameter A in the diagram) and vertical spacing, in mm, (parameter B in the diagram) when prompted. These numbers are recorded in the file calibration_dot_spacing.txt.

- The GUI window will then display a thresholded image, and the console will display the number of dots seen by each camera. Adjust the threshold values with the '+' and '-' keys until each camera sees only the nine dots used to calibrate it.

- Select the GUI window and hit Enter.

- Remove the dots.

- Select the GUI window and hit Enter.
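Before a homography can be fitted, each camera's nine detected dots must be paired with their floor coordinates. A minimal sketch under several assumptions: the origin is the center dot of the 5 x 5 grid, each camera's 3 x 3 block is selected by column and row offsets of -2 or 0 (in units of the spacings A and B), the camera is roughly aligned with the grid as the camera setup steps ask, and image rows run in the same direction as floor rows. `quadrantGrid` and `orderRowMajor` are hypothetical, and the project's own matching logic may differ.

```cpp
#include <algorithm>
#include <cassert>
#include <vector>

struct Point2 {
    double x, y;
};

// Floor coordinates (mm) of one camera's 3x3 block of calibration dots,
// row-major. A and B are the horizontal and vertical dot spacings.
std::vector<Point2> quadrantGrid(double A, double B, int colOffset, int rowOffset) {
    std::vector<Point2> pts;
    for (int r = 0; r < 3; ++r)
        for (int c = 0; c < 3; ++c)
            pts.push_back({A * (colOffset + c), B * (rowOffset + r)});
    return pts;
}

// Orders 9 detected centroids row-major: sort by image row into three rows,
// then sort each row left to right.
std::vector<Point2> orderRowMajor(std::vector<Point2> dots) {
    assert(dots.size() == 9);
    std::sort(dots.begin(), dots.end(),
              [](const Point2& a, const Point2& b) { return a.y < b.y; });
    for (int r = 0; r < 3; ++r)
        std::sort(dots.begin() + 3 * r, dots.begin() + 3 * r + 3,
                  [](const Point2& a, const Point2& b) { return a.x < b.x; });
    return dots;
}
```

The i-th ordered centroid and the i-th grid point then form one image/floor correspondence for the homography fit.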

The program should now be running. Your calibration information will be recorded in Quadrant0.txt, Quadrant1.txt, Quadrant2.txt, Quadrant3.txt, and calibration_dot_spacing.txt. If you choose not to recalibrate your cameras next time, the data in these files will be used to generate the calibration matrices.

- When calibrating, raise the dots to the same height as the patterns on the robots. It is also possible to calibrate one camera at a time using only 9 dots: place the dots under one camera, run the calibration routine, and save a copy of the Quadrant_.txt file generated for that camera (the other three files will be garbage) so that it is not overwritten. Repeat for all four cameras, then copy the four good files back into the project directory.

Calibration Results

Using the 8-parameter homography calibration, the maximum calibration error is around 5 mm, which corresponds to about 2 pixels.
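One way to measure a maximum calibration error like this is to map each calibration dot's image centroid through the fitted homography and take the largest distance to its measured floor position. This is a sketch with hypothetical names; the page does not say exactly how the 5 mm figure was obtained.

```cpp
#include <algorithm>
#include <array>
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

struct Point2 {
    double x, y;
};

using Homography = std::array<double, 9>;  // row-major, H[8] = 1

Point2 imageToFloor(const Homography& H, Point2 p) {
    double lambda = H[6] * p.x + H[7] * p.y + H[8];
    return {(H[0] * p.x + H[1] * p.y + H[2]) / lambda,
            (H[3] * p.x + H[4] * p.y + H[5]) / lambda};
}

// Largest Euclidean distance (mm) between mapped image points and measured floor points.
double maxCalibrationErrorMm(const Homography& H, const std::vector<Point2>& img,
                             const std::vector<Point2>& flr) {
    assert(img.size() == flr.size());
    double worst = 0.0;
    for (std::size_t i = 0; i < img.size(); ++i) {
        Point2 m = imageToFloor(H, img[i]);
        worst = std::max(worst, std::hypot(m.x - flr[i].x, m.y - flr[i].y));
    }
    return worst;
}
```

The two quoted figures, 5 mm and 2 pixels, imply roughly 2.5 mm of floor per pixel, which is a convenient scale for converting between the two.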

Below are the MATLAB calibration results for the intrinsic parameters of one of the cameras (note the fairly small distortion coefficients):

    % Intrinsic Camera Parameters
    %
    % This script file can be directly executed under Matlab to recover the camera intrinsic and extrinsic parameters.
    % IMPORTANT: This file contains neither the structure of the calibration objects nor the image coordinates of the calibration points.
    %            All those complementary variables are saved in the complete matlab data file Calib_Results.mat.
    % For more information regarding the calibration model visit http://www.vision.caltech.edu/bouguetj/calib_doc/

    %-- Focal length:
    fc = [ 835.070614323296470 ; 776.358109604829560 ];

    %-- Principal point:
    cc = [ 448.722941671177130 ; 333.157042750110920 ];

    %-- Skew coefficient:
    alpha_c = 0.000000000000000;

    %-- Distortion coefficients:
    kc = [ 0.000000000000000 ; -0.030646660052499 ; 0.000000000000000 ; 0.000000000000000 ; 0.000000000000000 ];
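To put these distortion coefficients in perspective, the sketch below evaluates how far the Caltech toolbox's distortion model moves a given pixel, using the values above. It assumes the toolbox convention of normalized coordinates (u - cc)/fc, radial terms kc(1), kc(2), kc(5) and tangential terms kc(3), kc(4); here only the fourth-order radial term kc(2) is nonzero, and skew is omitted because alpha_c is zero. `Intrinsics` and `distortionShiftPx` are hypothetical names.

```cpp
#include <cassert>
#include <cmath>

// Intrinsic parameters in the Caltech toolbox convention (0-based arrays here).
struct Intrinsics {
    double fc[2];  // focal length in pixels
    double cc[2];  // principal point in pixels
    double kc[5];  // kc[0], kc[1], kc[4] radial; kc[2], kc[3] tangential
};

// Pixel distance by which lens distortion displaces the undistorted pixel (u, v).
double distortionShiftPx(const Intrinsics& K, double u, double v) {
    assert(K.fc[0] > 0.0 && K.fc[1] > 0.0);
    double x = (u - K.cc[0]) / K.fc[0];  // normalized image coordinates
    double y = (v - K.cc[1]) / K.fc[1];
    double r2 = x * x + y * y;
    double radial = 1.0 + K.kc[0] * r2 + K.kc[1] * r2 * r2 + K.kc[4] * r2 * r2 * r2;
    double xd = radial * x + 2.0 * K.kc[2] * x * y + K.kc[3] * (r2 + 2.0 * x * x);
    double yd = radial * y + K.kc[2] * (r2 + 2.0 * y * y) + 2.0 * K.kc[3] * x * y;
    return std::hypot(K.fc[0] * (xd - x), K.fc[1] * (yd - y));
}
```

With these parameters the shift is zero at the principal point and about 3.8 pixels at the image corner (0, 0). Because only the r^4 term is present, the shift grows roughly with the fifth power of the distance from the principal point, so it is much smaller over most of the image.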

Pausing

Hit 'p' to pause the program, and hit 'p' again to resume.

Using the Command Console

To enter command mode, press 'c'. While in command mode, the main loop is not running; type 'exit' to leave command mode and resume the main loop.

Note: Because of packet loss, there is no guarantee that every robot will receive a command. It is advisable to send each command several times to make sure all the robots receive it. You can use the arrow keys to recall previously sent messages.

Enter commands in the following syntax:

<command name> <target ID> <parameter 1> <parameter 2> ... <parameter N>

To send the command to all robots, use ID 0. To send the command to a specific robot, use that robot's ID.

You can also enter multiple commands at a time:

sleep 1 sleep 2 wake 4 goto 0 100 -100 deadb 0 150

Commands

| Command | Description | Parameters | Example |
|---|---|---|---|
| sleep | The robot stops moving and stops sending data. | sleep <ID> | sleep 0 |
| wake | The robot wakes from sleep and resumes moving and sending data. | wake <ID> | wake 0 |
| goal | Change the swarm's goal state. | goal <ID> <Ix> <Iy> <Ixx> <Ixy> <Iyy> | goal 0 100 300 160000 40000 40000 |
| goto | Similar to goal, but only changes <Ix> and <Iy>. | goto <ID> <Ix> <Iy> | goto 0 100 300 |
| deadb | Change the robot motor deadband. The motors only move for speeds (wheel ticks per second) faster than this, which removes some jittering at low speeds. | deadb <ID> <velocity> | deadb 0 150 |
| egain | Change the estimator gains. | egain <ID> <KP> <KI> <Gamma> | egain 0 0.7 0.1 0.05 |
| cgain | Change the motion controller gains. | cgain <ID> <KIx> <KIy> <KIxx> <KIxy> <KIyy> | cgain 0 2 2 0.001 0.001 0.001 |
| commr | Change the communication radius. The agent discards any packets from other agents farther away than this distance. | commr <ID> <distance> | commr 0 1500 |
| speed | Change the maximum allowed speed for the robot. | speed <ID> <speed> | speed 0 300 |
| safed | Change the obstacle avoidance threshold. | safed <ID> <distance> | safed 0 200 |
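Several commands can share one line because each command takes a fixed number of parameters, so the line can be split without separators. A minimal sketch of that parsing, using the parameter counts from the table above (the ID followed by 0 to 5 parameters); the radio packet format sent to the robots is not described here, and `Command` and `parseCommands` are hypothetical names.

```cpp
#include <map>
#include <sstream>
#include <string>
#include <vector>

struct Command {
    std::string name;
    int targetId;                // 0 = broadcast to all robots
    std::vector<double> params;  // parameters following the ID
};

// Splits a line such as "sleep 1 sleep 2 wake 4 goto 0 100 -100 deadb 0 150"
// into commands. Returns false on an unknown command or a missing/invalid value.
bool parseCommands(const std::string& line, std::vector<Command>& out) {
    static const std::map<std::string, int> paramCount = {
        {"sleep", 0}, {"wake", 0},  {"goal", 5},  {"goto", 2},  {"deadb", 1},
        {"egain", 3}, {"cgain", 5}, {"commr", 1}, {"speed", 1}, {"safed", 1}};
    std::istringstream in(line);
    std::string name;
    out.clear();
    while (in >> name) {
        auto it = paramCount.find(name);
        if (it == paramCount.end()) return false;
        Command cmd{name, 0, {}};
        if (!(in >> cmd.targetId)) return false;
        for (int i = 0; i < it->second; ++i) {
            double p;
            if (!(in >> p)) return false;
            cmd.params.push_back(p);
        }
        out.push_back(cmd);
    }
    return true;
}
```

Rejecting the whole line on any error, rather than sending the commands parsed so far, avoids broadcasting a partially understood request to the swarm.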

Exiting the Program

Press ESC to quit the program.

Additional Considerations

The Machine Vision Localization System is a robust system for tracking the locations of e-pucks with rotationally invariant patterns. However, several problems remain as the system transitions from the paper "dice" patterns to LED light boards.

- The software (Logitech QuickCam) and drivers used for the web cameras include an auto-exposure and light-adjustment algorithm. While this works well under normal lighting, in a darkened arena it causes the cameras to over-expose. Consequently, the individual LEDs in the pattern boards are often hard to distinguish from one another, rendering the machine vision system nonfunctional.

- The current Logitech software (build 11.5) can only control the auto-exposure of 2 cameras at a time. While the newest software (build 12.0, or LWS 1.0) can control the exposure of 4 cameras at a time, it does not work with the machine vision code and renders the program inoperable. Research is ongoing to find workarounds, as control of exposure and light adjustment is key to a more robust and stable vision system. Another possible solution is to update all the libraries and SDKs in the hope that they can accommodate the newer software; updating the videoInput and OpenCV libraries may also help.

- Other solutions involve physically changing the environment to compensate for the web cameras' poor low-light sensing. For instance, upward-casting lights could "brighten" the environment, or the brightness of the projected patterns could be kept at a level that maintains proper camera exposure.

Updates and Notes on Changes Made

Since the first implementation of the Machine Vision Localization System, several updates have been made to transition the system from the paper "dice" patterns to the LED light boards.

- As discussed in the Indoor Localization System Position and Angle entry, the Machine Vision Localization System generates positional data for each e-puck from the center of mass of its identifier, such as a paper "dice" pattern or an LED light board. However, for most configurations of the rotationally invariant patterns, the center of mass of the pattern is not the center of mass of the e-puck. With the paper "dice" patterns, this problem was easily avoided by shifting each pattern so that its center of mass lay over the center of mass of the e-puck. With the LED light boards this is not easily done, so the algorithm was changed instead: the preprocessed target classifier document is augmented with additional values that offset the center of mass of the LED light board back to the center of mass of the e-puck.

- This change was implemented in the RGB Machine Vision Localization System code, which can be downloaded at the beginning of this entry.
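The center-of-mass correction amounts to a rigid offset applied in the robot's own frame. A minimal sketch, assuming each pattern's extra classifier values are an offset (dx, dy) in millimeters from the pattern's center of mass to the e-puck's center, expressed in a frame whose x axis points along the robot's heading. The actual file format and frame conventions in the RGB code may differ, and `Pose` and `patternToRobotCenter` are hypothetical names.

```cpp
#include <cmath>

struct Pose {
    double x, y;   // position in the floor frame, mm
    double theta;  // heading in the floor frame, radians
};

// Rotates the robot-frame offset (dx, dy) into the floor frame and adds it to
// the pattern's center of mass, giving the e-puck's center. Heading is unchanged.
Pose patternToRobotCenter(const Pose& pattern, double dx, double dy) {
    double c = std::cos(pattern.theta), s = std::sin(pattern.theta);
    return {pattern.x + c * dx - s * dy, pattern.y + s * dx + c * dy, pattern.theta};
}
```

Because the offset rotates with the heading, one pair of values per pattern is enough regardless of how the robot is oriented.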